Cornell and Adobe create software that lets you adjust your lighting after the shoot ends

posted Friday, August 23, 2013 at 5:43 PM EDT

Ever finish a photo shoot, pack up, then sit down in front of your computer and immediately find yourself wishing you could tweak your lighting? It's not a pleasant feeling, but clever software developed by researchers at Cornell University and Adobe could make it a thing of the past.

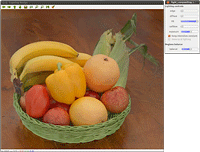

In a nutshell, the software lets you shoot multiple images of your subject, with only the lighting varying between shots, and then merge them into a single image. The effect is similar to high dynamic range photography, but with a twist: you can effectively alter the lighting in your image after the fact.

If the idea seems familiar, that's because we've seen something pretty similar in the past. French software developer Oloneo already offers an app which allows post-capture lighting adjustment, but there are some important differences in its approach, versus that used by Cornell and Adobe.

Oloneo PhotoEngine's ReLight tool, which is nicely demonstrated in a video on the company's website, lets you mix your photos in such a way that up to six light sources can be adjusted. For each, you can control the brightness independently, as well as changing the color of the light cast. Because real photos are used to create the effect, it can be surprisingly convincing, casting shadows and reflections correctly across your scene.
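To get a feel for why mixing real photos works, remember that light is additive: a scene lit by several lamps looks like the sum of the same scene lit by each lamp alone. The snippet below is a minimal sketch of that idea and not Oloneo's actual code. The `relight` function, its `gains` and `tints` parameters, and the tiny sample images are all hypothetical, and it assumes linear RGB pixel values between 0 and 1.

```python
# Hypothetical sketch: relight a scene by mixing one photo per light source.
# A pixel is a linear RGB tuple in [0, 1]; an image is a list of rows of pixels.

def relight(images, gains, tints):
    """Return the weighted sum of per-light images.

    images: list of images, one per light source, all the same size
    gains:  one brightness multiplier per light
    tints:  one (r, g, b) color multiplier per light
    """
    height, width = len(images[0]), len(images[0][0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            pixel = [0.0, 0.0, 0.0]
            for image, gain, tint in zip(images, gains, tints):
                for c in range(3):
                    pixel[c] += gain * tint[c] * image[y][x][c]
            # Clamp so over-bright mixes stay in the displayable range.
            row.append(tuple(min(1.0, max(0.0, v)) for v in pixel))
        result.append(row)
    return result


# Two tiny 1x2 "photos", each lit by a different light (made-up values).
key_light = [[(0.5, 0.5, 0.5), (0.25, 0.25, 0.25)]]
rim_light = [[(0.0, 0.0, 0.0), (0.5, 0.5, 0.5)]]

mixed = relight(
    [key_light, rim_light],
    gains=[1.0, 0.5],                          # halve the rim light
    tints=[(1.0, 1.0, 1.0), (1.0, 0.5, 0.0)],  # warm tint on the rim light
)
print(mixed)
```

Because every input is a real photograph, shadows and reflections from each light come along for free; the sliders only change how much of each photo ends up in the mix.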

The technique used by Cornell and Adobe, while superficially quite similar, works in an entirely different manner. For one thing, it doesn't appear to allow the tuning of light color temperature that's provided by Oloneo's software, although this could certainly be added in the future. Perhaps more importantly, though, it provides a lot more than the ability to just raise or lower the strength of individual light sources. Instead, you're provided with a range of control types, each set simply by moving a slider.

The Fill Light control aims to create a flat, evenly-lit image with no deep shadows or bright highlights. The Diffuse Color Light control, meanwhile, emphasizes subject color. An Edge Light control emphasizes high-contrast edges, while a Soft Lighting Modifier aims to reduce the harshness of shadows that were emphasized by the Edge Light control. Finally, there are Regional Lighting Modifier and Exposure controls, which adjust overall contrast and luminance. Any of these controls can be adjusted globally, or locally for individual subjects in the image.

Where things really get clever, though, is in the ability to adjust the effect for individual subjects within the scene. It isn't immediately clear whether subjects are located automatically or manually, but either way, you can control rendering of subjects individually -- much as you would have done in the studio by tweaking your lighting.
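One common way to apply an adjustment to just one subject is to blend two renderings through a per-pixel mask. The snippet below is only a sketch of that general technique, not the researchers' method: the `blend_with_mask` function and the sample values are hypothetical, and it assumes a mask is already available, with 1.0 meaning "inside the subject" and 0.0 meaning "outside".

```python
# Hypothetical sketch: apply an adjusted rendering only where a mask selects it.
# A pixel is an RGB tuple; an image is a list of rows; a mask is a list of rows
# of floats in [0, 1].

def blend_with_mask(base, adjusted, mask):
    """Per pixel: mask * adjusted + (1 - mask) * base."""
    return [
        [
            tuple(m * a + (1.0 - m) * b for a, b in zip(adj_px, base_px))
            for base_px, adj_px, m in zip(base_row, adj_row, mask_row)
        ]
        for base_row, adj_row, mask_row in zip(base, adjusted, mask)
    ]


# A 1x3 image: the subject covers the first pixel and fades out over the second.
base = [[(0.25, 0.25, 0.25)] * 3]       # original rendering
adjusted = [[(0.75, 0.75, 0.75)] * 3]   # brighter rendering for the subject
mask = [[1.0, 0.5, 0.0]]                # soft-edged subject mask

local = blend_with_mask(base, adjusted, mask)
print(local)
```

A soft-edged mask like the one above avoids a visible seam where the adjusted subject meets the untouched background.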

Of course, you could do all of this in Photoshop already, but the real advantage of Cornell and Adobe's creation is that it makes this process much quicker and simpler. In an evaluation of the software, novice users were given stacks of a hundred or more images, shot from a tripod while a light was moved around the room. Although their results didn't best what professionals could do in Photoshop when given 45 minutes to work on the stack, what the novices managed in five minutes or less was fairly impressive.

If you want to know more about the project and see what you can manage with the same original data, you'll find a paper from the researchers behind the project -- as well as the source image stacks -- on the Cornell website.

(via Reddit)