Computers vs. Humans: Which will win the battle to geotag your photos?

posted Friday, March 4, 2016 at 8:49 AM EDT

Chances are that if you look at one of your photos, you can remember where you shot it almost immediately. Take a look at someone else's photos without context, though, and you'd be left searching for clues to the location. You might think that asking a computer to identify locations from photo content alone would be a lost cause, but new research from Google shows that modern computing techniques can do surprisingly well at this difficult task.

Humans are already amazingly good at this sort of chore, relying on the knowledge we've gathered over our lifetimes to make a judgement call on what the photo is telling us. Even for a photo you've never seen before, there's a fair chance you'd at least be able to get close just from little hints like how people are clothed, what the vegetation looks like, which side of the road cars are driving on, and probably dozens of other such observations. If you're lucky, there might even be a notable landmark that lets you tie down the location more accurately.

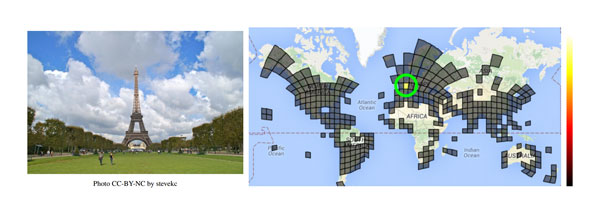

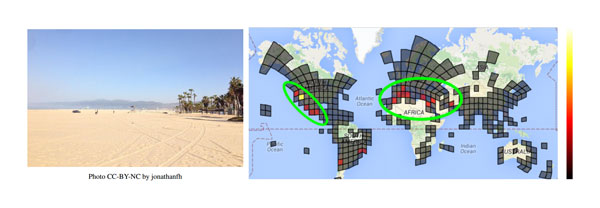

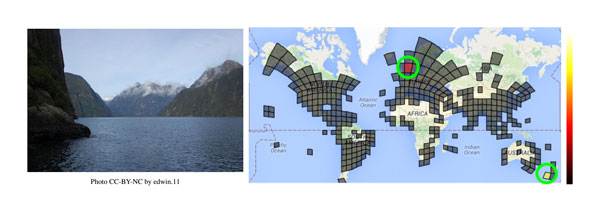

There are limits to how far this can take you, but they rather depend on the image itself and especially on how much impact humans have had on the location. For example, take a look at the photos below. Most likely, you've never seen any of these exact shots before. Correctly guessing the location of the first shot is as easy as they come, but the others are rather tougher. Even for these, though, you can probably at least make educated guesses as to where they were captured. In fact, this is the underlying concept behind games like GeoGuessr, and they wouldn't be as popular as they are if we weren't pretty good at playing them.

As we said, we're starting off really simply here. Paris' Eiffel Tower is instantly recognizable to most of us, and we doubt you had too much difficulty identifying not just the continent and country, but even the city and (if you're familiar with Paris) the location within the city.

This one's quite a bit tougher, but there are still a fair few clues. For one thing, you know it's a coastal location from the vast expanse of sand. The palm trees are another hint, and so too is the design of the lifeguard station. A little closer to the foreground is a pickup truck, another hint that might lead you towards a particular country. (If you guessed California, incidentally, you'd be right.)

This last shot is really pretty difficult to guess, though. The rugged scenery is certainly beautiful, and you can get a hint of the climate from snow on some of the mountain peaks, but otherwise there's not a lot to go on. (Did you say New Zealand? If so, give yourself a pat on the back.)

But how well can computers manage to identify these same locations? The answer is pretty amazing: Given the exact images you just tried to identify, a neural network model called PlaNet suggested locations for all three, and in each case the correct location appeared right at the top of the list. The image below, taken from a paper published by the team behind the research, shows the locations guessed by PlaNet for each image. (Note that for this particular example, the resolution of the model was decreased to make it easier to represent visually. In the standard model, PlaNet's guesses are apparently even more precise.)

If you want to know how it works (and you're feeling pretty technical), you'll find the entire research paper available here. In a nutshell, though, the algorithms behind PlaNet learn to identify surprisingly subtle clues in trying to determine the location for an image. According to the paper, these can include landscapes typical of different regions, as well as objects commonly found there, local architectural styles, and the flora and fauna specific to each region. And if that's not enough, PlaNet can also take context from other images included in the same gallery, reasoning that they were likely captured at around the same time and location.

When given a sample of 2.3 million geotagged images taken from Flickr (with the geotags themselves withheld from the algorithm, obviously), PlaNet is said to be able to correctly guess the continent almost half of the time, and the country nearly one-third of the time. For just slightly more than one in ten images, the algorithm can correctly identify the city, and for nearly four percent of images (or a little more than one in 30) it can even identify the location right down to the street level.
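For the technically inclined, here's a rough idea of how this kind of "accuracy at each scale" figure could be tallied. This is only a minimal sketch, not the researchers' evaluation code: it assumes each guess and each true location is a latitude/longitude pair, measures the great-circle distance between them with the standard haversine formula, and counts a guess as correct at a given scale if it lands within that scale's distance limit. The function names and the distance limits in `THRESHOLDS_KM` are our own illustrative assumptions, not figures taken from this article.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

# Hypothetical distance limits (km) for each scale, chosen for illustration only.
THRESHOLDS_KM = [
    ("street", 1.0),
    ("city", 25.0),
    ("country", 750.0),
    ("continent", 2500.0),
]


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def accuracy_by_scale(guesses, truths):
    """Fraction of guesses that fall within each scale's distance limit."""
    if not guesses or len(guesses) != len(truths):
        raise ValueError("need equal, non-empty lists of guesses and truths")
    errors = [haversine_km(g[0], g[1], t[0], t[1])
              for g, t in zip(guesses, truths)]
    return {name: sum(e <= limit for e in errors) / len(errors)
            for name, limit in THRESHOLDS_KM}


if __name__ == "__main__":
    # Two made-up guesses: one exactly right, one that says London for a Paris photo.
    guesses = [(48.8584, 2.2945), (51.5074, -0.1278)]
    truths = [(48.8584, 2.2945), (48.8566, 2.3522)]
    print(accuracy_by_scale(guesses, truths))
```

Because every tighter limit sits inside the looser ones, a guess that's right at street level also counts at the city, country, and continent scales, which is why the percentages shrink as the scale gets finer.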

And even more impressively, when shown images from GeoGuessr and pitted head-to-head against 10 people who, according to the paper, were well-traveled (and hence probably better-equipped than most to take on the challenge), it was PlaNet's neural network that won, not the human subjects. PlaNet apparently won 28 rounds versus 22 rounds won by the humans. Not just that, but it was also far more accurate overall, coming within an average of around 700 miles of the correct location for its guesses, while the humans were off by an average of around 1,440 miles.
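If you're curious how a head-to-head like this might be scored, here's a minimal sketch under some assumptions of our own: each round yields one distance error (in kilometers) per contestant, the smaller error wins the round, and ties count for neither side. The article's "average" doesn't say which kind of average is meant, so this sketch simply uses the arithmetic mean. The `score_match` function and its field names are hypothetical, and the numbers in the example are made up rather than taken from the paper.

```python
from statistics import mean

KM_PER_MILE = 1.609344


def score_match(model_errors_km, human_errors_km):
    """Tally round wins and mean distance error (in miles) for two contestants."""
    if not model_errors_km or len(model_errors_km) != len(human_errors_km):
        raise ValueError("need equal, non-empty lists of per-round errors")
    rounds = list(zip(model_errors_km, human_errors_km))
    return {
        # A round goes to whichever guess landed closer to the true location.
        "model_wins": sum(m < h for m, h in rounds),
        "human_wins": sum(h < m for m, h in rounds),
        "model_mean_miles": mean(model_errors_km) / KM_PER_MILE,
        "human_mean_miles": mean(human_errors_km) / KM_PER_MILE,
    }


if __name__ == "__main__":
    # Three made-up rounds, errors in kilometers.
    result = score_match([100.0, 500.0, 300.0], [200.0, 400.0, 900.0])
    print(result)
```

Note that the two measures can disagree: a contestant could win more rounds while still having the worse overall error if a few of its misses are wildly off, which is why it's worth reporting both.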

With Google as the company behind this research, we can't help but wonder where it could lead us all. Automatic geotagging of images as they're uploaded to Google+ Photos, perhaps? The accuracy might not quite be there yet, but possibly within another few iterations it could be close enough to save us a whole lot of time going back into our archives and manually geotagging our favorite shots!

(via Petapixel. Stirling Point Signpost image courtesy of studio tdes. Eiffel Tower image courtesy of stevekc. Santa Monica Beach image courtesy of jonathanfh. Milford Sound image courtesy of edwin.11. All images via Flickr, and used under a Creative Commons CC-BY-2.0 license. Images have been modified from the originals.)