Sony mirrorless cameras could soon focus as fast as DSLRs if this patent becomes a reality

posted Tuesday, September 15, 2015 at 2:51 PM EDT

A recent patent publication from Sony describes a new technology that could improve low-light autofocus in mirrorless cameras that use phase-detection or hybrid autofocus, by placing uniquely shaped microlenses atop individual pixels.

Before we dive into the details of the patent, it helps to understand the two most widely used forms of autofocus, how they relate, and how they can even work alongside one another. If you’re already well-versed in the technical side of autofocus systems, skip down to the patent section.

Phase-detection and contrast-detection autofocus systems

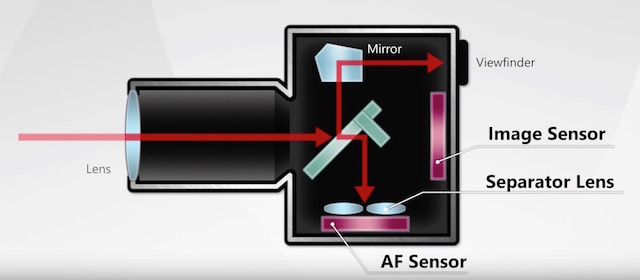

The most common method of autofocus in SLRs is phase detection. As explained in this video from Toshiba, SLRs use a dedicated autofocus system that is almost a camera-within-a-camera. Specifically, part of the light entering the camera passes through a semi-transparent section of the main mirror onto a smaller secondary mirror behind it, which reflects that light down through small separator lenses that split it into pairs of images on a dedicated autofocus sensor.

The sensor, which has a fixed light sensitivity, then measures how far apart the split images land on its pixels. From that offset, the camera calculates how far, and in which direction, the attached lens is out of focus, and adjusts it accordingly until the images line up where they should for sharp focus. Because it measures the focus error directly instead of searching for it, phase detection moves smoothly from one focus point to another; it’s essentially a distance calculator.
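To make the “distance calculator” idea more concrete, here’s a minimal Python sketch of the core comparison, under simplifying assumptions of our own: each separator lens produces a one-dimensional brightness profile, and an in-focus subject yields zero offset between the two (real AF modules compare against a calibrated reference spacing). The function names `estimate_phase_shift` and `lens_correction`, and the `steps_per_pixel` conversion factor, are hypothetical illustrations, not anything taken from a camera maker’s firmware.

```python
def estimate_phase_shift(left, right, max_shift):
    """Return the integer offset s (in pixels) that best aligns the two profiles,
    i.e. the s minimizing the mean absolute difference between left[i] and right[i + s]."""
    n = len(left)
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        # Only compare indices where both left[i] and right[i + s] exist.
        lo, hi = max(0, -s), min(n, n - s)
        if hi <= lo:
            continue
        cost = sum(abs(left[i] - right[i + s]) for i in range(lo, hi)) / (hi - lo)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def lens_correction(shift, steps_per_pixel):
    """Convert the measured offset into a signed lens-drive command.
    steps_per_pixel is a hypothetical calibration constant; the sign gives the direction."""
    return shift * steps_per_pixel


# A defocused edge: the right-hand image lands 2 pixels further along than the left one.
left = [0, 0, 0, 10, 10, 10, 10, 10]
right = [0, 0, 0, 0, 0, 10, 10, 10]
shift = estimate_phase_shift(left, right, max_shift=4)
print(shift, lens_correction(shift, steps_per_pixel=5))  # 2 10
```

Because a single measurement yields both the direction and the size of the focus error, the lens can be driven toward focus in one move rather than by trial and error.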

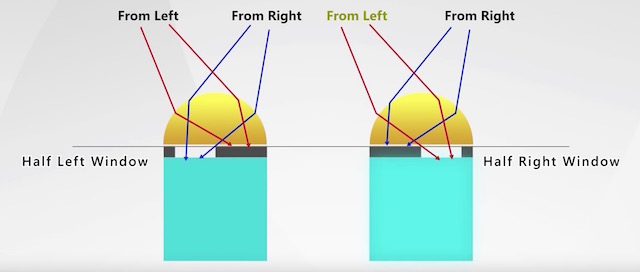

As effective as this technology is, phase-detection autofocus loses both speed and accuracy in low light, especially as pixel size decreases. This is because phase-detection pixels must be shaded, so each one receives only about half of the light hitting it. 1
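The cost of shading can be put in rough numbers with a simple noise model of our own choosing, not anything from Sony’s patent: each pixel’s signal is a photon count N, degraded by photon shot noise (√N) and a fixed read noise r, so SNR = N / √(N + r²). The photon counts and the read-noise figure below are made-up illustrative values.

```python
import math


def pixel_snr(photons, read_noise):
    """Signal-to-noise ratio of one pixel under a simple model:
    shot noise (square root of the photon count) plus read noise, added in quadrature."""
    return photons / math.sqrt(photons + read_noise ** 2)


def shading_penalty(photons, read_noise):
    """SNR of a shaded pixel (half the photons) relative to an unshaded one."""
    return pixel_snr(photons / 2, read_noise) / pixel_snr(photons, read_noise)


# Hypothetical values: a bright scene vs. a dim scene, with the same (made-up) read noise.
print(round(shading_penalty(10000, read_noise=3), 3))  # 0.707
print(round(shading_penalty(20, read_noise=3), 3))     # 0.618
```

In bright light the shaded pixel keeps about 71% (1/√2) of an unshaded pixel’s SNR; in dim light, where read noise matters more, the penalty grows toward a factor of two, which is consistent with phase detection degrading as light gets scarce.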

Contrast detection, on the other hand, is a trial-and-error approach: the camera moves the lens and reads the light hitting the image sensor’s pixels to find the focus position that produces the most contrast between neighboring pixels. This method is far more resource-intensive than phase detection, but more accurate, especially on high-megapixel sensors, where pixels tend to be smaller and light even scarcer.

The problem with contrast detection is that it requires a camera to ‘overshoot’ the correct focus point to determine when focus is just right: a single contrast reading doesn’t tell the camera how far off focus is, so it only knows it has passed peak sharpness once contrast starts to fall. While camera manufacturers have reduced the time and distance spent overshooting, contrast detection is still slower, and it’s far less effective for video and for subjects moving towards or away from the camera.
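Contrast detection’s trial-and-error search, and the overshoot it requires, can be sketched as a simple hill climb. In this Python sketch, `sharpness` stands in for whatever contrast score a camera computes from its pixels (here a made-up curve peaking at lens position 37), and `contrast_detect` with its starting step size is our own hypothetical illustration; real cameras use more refined search strategies.

```python
def contrast_detect(contrast, start, step=8):
    """Hill-climb toward the lens position that maximizes contrast(pos).
    Returns the final position and every position that was tried."""
    pos, best = start, contrast(start)
    tried = [start]
    direction = 1
    while step >= 1:
        nxt = pos + direction * step
        tried.append(nxt)
        c = contrast(nxt)
        if c > best:
            # Still climbing: keep moving the same way.
            pos, best = nxt, c
            continue
        # Contrast fell, so we overshot the peak (or started the wrong way): look back.
        nxt = pos - direction * step
        tried.append(nxt)
        c = contrast(nxt)
        if c > best:
            pos, best = nxt, c
            direction = -direction
        else:
            # The peak is bracketed within one step of pos: refine with smaller steps.
            step //= 2
    return pos, tried


def sharpness(pos):
    # Hypothetical contrast curve with its peak at lens position 37.
    return -(pos - 37) ** 2


final, tried = contrast_detect(sharpness, start=0)
print(final, max(tried))  # 37 48
```

The positions tried beyond 37 are the overshoot: the climb only learns it has passed peak focus once contrast drops. Each reading also means physically moving the lens and reading out the sensor, which is why this search is slower than a direct measurement.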

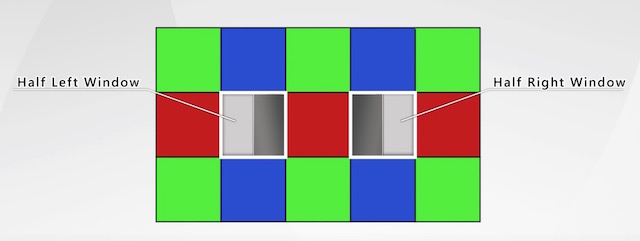

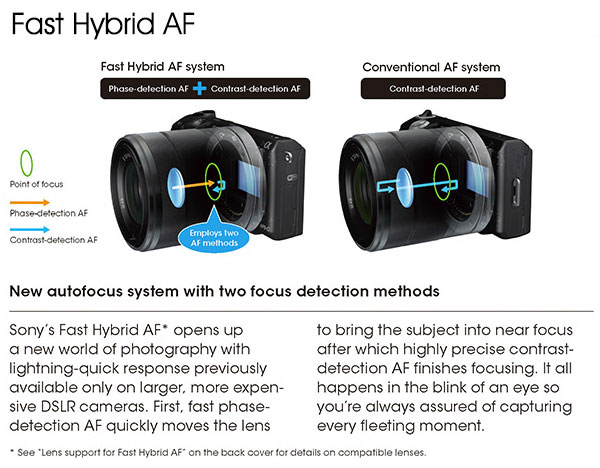

When mirrorless cameras came around, the lack of a mirror and their smaller form factor meant companies would need to build phase-detection capability into the image sensor itself, if they were to have it at all (which most did, because it’s so much faster than contrast detection). The result for many, Sony included, was hybrid systems that first use phase detection to get focus close to the subject, then use contrast detection for more precise focus. 2
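The coarse-then-fine idea behind these hybrid systems can be sketched in a few lines of Python. This is our own simplified illustration, not Sony’s implementation: `pdaf_estimate` stands for the lens position a phase-detection measurement predicts, `sharpness` is a made-up contrast curve, and the small `window` scanned by contrast detection around the estimate is an arbitrary assumption.

```python
def hybrid_focus(pdaf_estimate, contrast, window=3):
    """Coarse stage: trust phase detection and jump straight to its estimate.
    Fine stage: check contrast at a few nearby lens positions and keep the sharpest.
    Returns the chosen position and how many contrast readings were needed."""
    candidates = range(pdaf_estimate - window, pdaf_estimate + window + 1)
    best = max(candidates, key=contrast)
    return best, len(candidates)


def sharpness(pos):
    # Hypothetical contrast curve with its peak at lens position 37.
    return -(pos - 37) ** 2


# Suppose phase detection lands slightly off, at position 35.
print(hybrid_focus(35, sharpness))  # (37, 7)
```

Only seven contrast readings are needed here because phase detection has already narrowed the search. If the true peak fell outside the window, this sketch would miss it; a real system would presumably widen the search in that case.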

However, unlike SLRs, whose dedicated autofocus sensors have larger pixels and no filters on top of them, mirrorless cameras must work within the constraints of the same sensor that captures the image. This means the phase-detection pixels, already light-starved because shading blocks half of their light, receive even less because of the color filter and light-shielding films layered beneath the sensor’s microlenses.

The patent

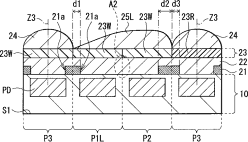

Sony’s patent publication (meaning the application has been filed and published, but not yet granted or rejected) lays the groundwork for creating microlenses with different profiles (shapes), so that the microlenses over the phase-detection pixels could direct only light coming from the desired direction onto the surface of each pixel, without requiring shading. What makes the approach notable is that the patent implies this would be done on a pixel-by-pixel basis.

By removing the need for shading, this change would give the phase-detection pixels, and the overall autofocus system, much more information to work with in low light.

Could this mean mirrorless cameras might soon focus as well as current SLRs? Possibly. If this patent comes to fruition, phase-detection autofocus in mirrorless systems would perform far better in low light than ever before. However, single-sensor phase-detection autofocus will likely never focus quite as fast as cameras with dedicated phase-detection systems, especially since any improvement that works on mirrorless sensors could likely also be applied to SLRs’ dedicated autofocus sensors.

1 Phase-detection pixels are ‘shaded’ so that each ‘sees’ only light rays coming from one side of the lens or the other; comparing the two sides is what allows the camera to calculate how far to adjust the focus of the attached lens.

2 Sony Senior General Manager Kimio Maki spoke about the company’s hybrid focusing technology in a recent interview we published.