Monitor Calibration: Who needs it?

(You, if you care about your photos)

Posted: July 2007

Updated: April 2008

Here at Imaging Resource, a lot rides on our judgement of image quality in the cameras we review, so we pay particular attention to monitor calibration and our viewing environments, to avoid introducing even the slightest bias into our evaluations.

It goes without saying that an organization like Imaging Resource needs to keep its monitors carefully calibrated, but what about our readers? Bottom line: it doesn't matter who you are; if you care about your images, you need to care about the condition of your monitor(s). The good news is that monitor calibration is fairly easy, and even a modest investment can pay off big in image quality. If you'd like to read about the monitor calibrator we use here at IR, you can check out our original Datacolor Spyder3 Elite review.

| Which shot is right? Is it the camera or your monitor? |

| With an uncalibrated monitor, you can't tell what exposure is right, and your prints won't come close to matching your screen. |

Is My Monitor OK?

So how do you know whether your monitor is showing all it should be? To really tell, you need to calibrate it, but a few simple checks will show just how badly a calibration is needed. The test images below will quickly reveal how far out of adjustment your monitor is. (Note that we said "how badly," not "if": unless you're using a very high-end display, it's a pretty safe assumption that an uncalibrated monitor is significantly out of kilter.)

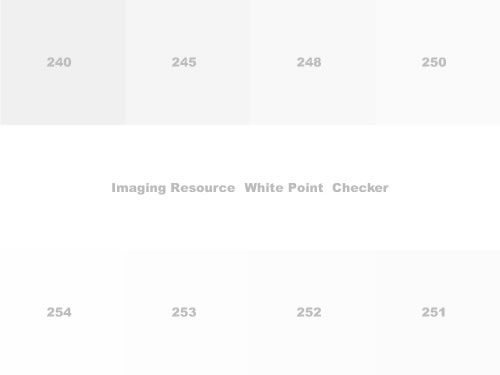

Can you see the highlights OK?

The image above shows eight blocks of grey tints, with a pure white stripe running across the middle. The numbers on each block show the pixel value that block contains. (That is, the block labeled 251 has red, green, and blue pixel values of 251, 251, 251.)

On a perfectly calibrated monitor, you'd be able to distinguish (if only just barely) the difference between the white central row and the block labeled 254. More typically, a "good" monitor would let you see the boundary between the center row and the 250 or 251 block. How many blocks can you see? (Many photo-oriented web sites start their "Is your monitor OK?" greyscale wedges at 245 or so. That's an awfully loose standard: your monitor could be pretty messed up and you'd still be able to see the difference between 245 and pure white.)

How about the shadows?

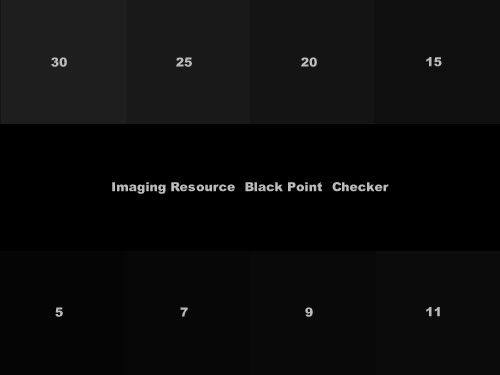

The chart above is similar to the earlier white-point checker, only this time for deep shadows. The center of the image is pure black (pixel values of zero), with the numbers on each of the 8 blocks surrounding it corresponding to the pixel values of the dark grey tints they contain. You'll have a hard time seeing shadow detail if the light's too bright in your working area, so make sure that the room is somewhat dim before performing the check. (The whole issue of proper viewing environment really begs for a whole separate article, one we haven't written yet.)

| A Deeper Look at: Shadows |

| Highlight and shadow detail isn't just about black and white: It affects colors too. Learn why. |

In a dimly lit room, an excellent monitor would let you see the boundary between the central row and the "5" block. A good monitor may not go that far, but should let you see the difference between the "15" block and pure black. Many monitors may only show separation in the "30" block, and quite a few may show no blocks at all. If you can't visually separate at least the "20" and preferably the "15" block from the background, your monitor needs adjustment and calibration. (Do note, though, that room lighting can have a very pronounced effect on how far you can see into the shadows. You may want to adjust your room lighting before you decide that your monitor has shadow problems.)

How's your contrast?

There's more to accurate tonal rendition than just highlight and shadow handling, though; contrast is also very important. Computer displays behave differently than you might expect. Because they're digital, you might assume that each unit of pixel value produces the same change in brightness. While it would be easy to build computer monitors that did this, it turns out they wouldn't work well for editing photos.

The problem is that human eyes aren't very linear. It turns out that our eyes respond much more to relative changes in brightness than to absolute brightness levels. So the same absolute brightness change that appears small in a strong highlight would look enormous in a shadow area.

| A Deeper Look at: Gamma |

| What exactly is gamma? And how did we end up with 2.2 as the standard? Learn more about Gamma |

If we want equal increments in pixel value to produce equal increases in perceived brightness, we need to tweak the way the monitor translates pixel numbers into brightness onscreen. Engineers call the input/output curve that does this a gamma curve, but you and I can just think of it as contrast, since that's what changes in gamma tend to look like to our eyes. Higher gamma numbers mean higher contrast; lower numbers mean less contrast. The sRGB standard used in most computer displays calls for a gamma of approximately 2.2.
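For the curious, here's a minimal Python sketch of what such a curve looks like in numbers. It assumes a pure power-law gamma of 2.2 (the actual sRGB curve differs slightly near black), and the function name `relative_luminance` is just our own label for illustration, not part of any calibration package.

```python
def relative_luminance(pixel_value: int, gamma: float = 2.2) -> float:
    """Fraction of full-white brightness for an 8-bit pixel value,
    assuming a simple power-law gamma curve (an approximation)."""
    return (pixel_value / 255) ** gamma


# The same 10-unit step in pixel value produces very different
# absolute brightness changes in the shadows and in the highlights.
shadow_step = relative_luminance(20) - relative_luminance(10)
highlight_step = relative_luminance(250) - relative_luminance(240)

print(f"shadow step:    {shadow_step:.4f} of full white")
print(f"highlight step: {highlight_step:.4f} of full white")
```

The shadow step comes out far smaller than the highlight step in absolute terms, which is the point: the curve spends its pixel values where our eyes are most sensitive to small changes.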

So how do you tell whether your monitor is set to the correct gamma level of 2.2? It's actually pretty easy. If your monitor is adjusted properly, the pattern below will appear as all the same shade of grey when you view it at some distance from the screen, or if you just throw your eyes out of focus at a closer viewing distance.

The central portion of this pattern is just an alternating series of black and white lines. Half white and half black averages out, by definition, to 50% of the brightest white your monitor can display. The surrounding grey area is set to a pixel value of 186, since that's the pixel value for 50% brightness at a gamma of 2.2. Simple.
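If you'd like to check where that 186 comes from, the arithmetic is a one-liner. This sketch again assumes a pure power-law gamma; the function name is hypothetical.

```python
def pixel_value_for_brightness(fraction: float, gamma: float = 2.2) -> int:
    """8-bit pixel value whose displayed brightness is `fraction` of full
    white, assuming a simple power-law gamma curve."""
    return round(255 * fraction ** (1 / gamma))


print(pixel_value_for_brightness(0.5))  # 186
```

In other words, 255 × 0.5^(1/2.2) ≈ 186.1, which rounds to the 186 used in the test pattern.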

So, if you don't see a uniform grey when you look at the image above, your display's gamma setting is off. Fixing this really requires a display calibrator, because the "contrast" control on LCDs doesn't actually control the gamma.
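A pattern like the one described above is easy to generate yourself. The sketch below is our own rough approximation, not the exact image used on this page: the size, border width, and output filename are all assumptions. It builds a square of alternating one-pixel black and white lines surrounded by 186 grey, and writes it as a binary PGM file using only the Python standard library.

```python
def gamma_test_pattern(size: int = 256, border: int = 64,
                       grey: int = 186) -> list[list[int]]:
    """Rows of 8-bit grey values: a centre square of alternating white and
    black one-pixel lines, surrounded by a solid grey border."""
    rows = []
    for y in range(size):
        row = []
        for x in range(size):
            inside = (border <= x < size - border
                      and border <= y < size - border)
            if inside:
                row.append(255 if y % 2 == 0 else 0)  # white/black lines
            else:
                row.append(grey)                      # 50% grey at gamma 2.2
        rows.append(row)
    return rows


def to_pgm(rows: list[list[int]]) -> bytes:
    """Encode the rows as a binary (P5) PGM image."""
    height, width = len(rows), len(rows[0])
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(value for row in rows for value in row)


if __name__ == "__main__":
    # Hypothetical output filename; open it in any viewer that reads PGM.
    with open("gamma_check.pgm", "wb") as f:
        f.write(to_pgm(gamma_test_pattern()))
```

View the result at exactly 100% zoom: any scaling by the viewer blends the one-pixel lines together and defeats the test.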

OK, my monitor is out of whack: What should I do about it?

Don't feel bad; without adjustment 99.9% of monitors will fail one or more (probably all) of the simple tests just described. What you do now depends on how much you care about viewing your photos accurately. If you care only a little, or are on an extremely limited budget, you can do a visual adjustment/calibration. This approach uses a piece of software (thankfully, either free or already built into your computer's operating system) and your own eyeballs to get your screen looking approximately right. This level of calibration won't be good enough for critical work, but is definitely better than making no adjustment at all. (We hope to post an article soon that will explain how to do this.)

If you care more about your photos, and can afford to invest at least some money, the best way to go is with a hardware display calibrator and its associated software. Prices for these systems range from a low of about $70 US upwards to several hundred dollars. As you'd expect, you tend to get what you pay for, and there are differences between competing units at any given price.

Here at IR, we spent quite a bit of time experimenting with the various display-calibration solutions on the market, and settled on the products by Datacolor as producing the most consistent results, at least within our environment. There are, of course, a number of solutions available from other vendors as well, and you may well find that one of these fits your own needs best. Some high-end display systems also come with their own dedicated calibration systems, and those may be the best choice for use with those displays, thanks to the close connection between the calibration hardware/software and the inner workings of the monitors themselves. The good news is that virtually any hardware calibrator system will produce significantly better results than a simple visual adjustment, so any move you make in this direction will help matters for you.

We're working on an overview of the various monitor calibration systems currently being sold, to help our readers see where they fit into the overall market, as well as reviews of some of the more popular solutions. Given our forever-behind status on the camera reviews that are the bread and butter of what we do here though, we won't make any promises as to just when we'll manage to get any of this posted. :-(

Here's a chart showing the different Datacolor products, explaining the differences between them, to help you decide which version would suit you best.

Spyder3 Feature Comparison

If you can afford it, the Datacolor Spyder3 Elite gives the most flexibility and bang for the buck, and is what we use here. If you only use a single monitor, and don't expect to add a second (or third) though, the Spyder3 Pro would be a good choice. The Spyder2 Express is very inexpensive, but we frankly think that most of our readers would be happier with at least the Spyder3 Pro version. Of course, if you're content with the limitations of the Spyder2 Express (single monitor, sRGB only, no white-point pre-tweaking), we certainly won't stand in your way.

Update: See our Datacolor Spyder5 review for the latest version, and order the Spyder5 colorimeter from one of Imaging Resource's trusted affiliates:

- Datacolor Spyder5 Express: ADORAMA | AMAZON | B&H

- Datacolor Spyder5 Pro: ADORAMA | AMAZON | B&H

- Datacolor Spyder5 Elite: ADORAMA | AMAZON | B&H

* L-Star technology is Licensed Property of INTEGRATED COLOR SOLUTIONS INC., Patent No.: 7,075,552 and No. 6,937,249. ©2007 Datacolor. Datacolor and other Datacolor product trademarks are the property of Datacolor. All rights reserved.