Interview: Sony’s Kenji Tanaka on why Sony doesn’t worry about competitors, plus AI and AF

posted Thursday, November 8, 2018 at 7:00 AM EDT

I had a chance to catch up with Sony's Kenji Tanaka at the Photokina trade show in Cologne, Germany back at the end of September, and have finally managed to get the transcript of that interview into shape to share with our readers. (Huge thanks to IR Senior Reviews Editor Mike Tomkins, for his help with the transcription and editing.)

I was very interested to speak with Tanaka-san, given the recent entries by both Nikon and Canon into the mirrorless full-frame camera market. Sony's been making hay and winning market share while the two giants seemingly slept, and a lot of the business they've picked up has been at the expense of the two old-line camera makers. So I wondered how Sony viewed their future prospects, now that their two largest competitors had both made credible entries into the market.

While I'd of course expect Tanaka-san to put the best face possible on the situation, I do have the impression that Sony's business has been less impacted by the new entries than it might have been.

Then there's the question of how Sony's sensor division works with competing camera makers, and to what extent the tight coupling between Sony Sensors and Sony Digital Imaging will continue to be a differentiator for Sony's camera business.

Finally, I also got to talk a bit more about autofocus technology and the role AI is playing in that ongoing evolution. It was an interesting conversation, as always. Read on for the details!

Dave Etchells/Imaging Resource: To start out with the most obvious topic, Nikon and Canon are both out with their full-frame mirrorless systems, with varying reactions by different portions of the market. They both have strengths and weaknesses, but it seems to me that in some ways they don't need to be bases-loaded home runs, but simply good enough to keep people from migrating away. How do you adjust for that shift in your business, that there will be, I think, significantly fewer people coming from Canon and Nikon now?

[Kenji Tanaka: Senior General Manager, Business Unit 1, Digital Imaging Group, Imaging Products and Solutions Sector, Sony Corp.]

Kenji Tanaka/Sony: Looking at the competitors, I don't care about the competitors. You know, I don't want... Our vision is not to shift the customers from other competitors to our models. Our vision is just to expand the industry.

DE: Yes, I know that's something that you've been focusing on, is growing the market overall. Certainly, though, some of your business has been coming from people who are switching, so I guess maybe the question I should ask is, in your sales growth -- which has really been quite extraordinary -- what percentage has been from people you would consider to be outside the normal industry?

KT: Outside the industry?

DE: Yes; what percentage of your own sales growth has come from the market expansion you're talking about, as opposed to people coming from other platforms?

KT: Ah, other platforms. Yes, of course some customers are coming from other competitors, but this is a secret data, [what] percentage is secret data.

DE: Oh, that's secret, confidential.

KT: Yeah, I can't answer the question, but this is a factor. But I want to say again that our vision is just to increase the total industry, so the customers moving from here to here, that is not what [we think about].

DE: Yes, so your focus is not "How many people can we pull from other platforms?", no...

KT: Our thinking is to let them do their business, how can we increase our own. The industry of full-frame is larger. This is my vision.

DE: Mmm, yeah, yeah. And that's some of your current messaging like Be Alpha, and Alpha Female.

Neal Manowitz/Sony: Yeah. To support Kenji-san's point, what we had seen, before entering the market, we had seen actually the decline in the market. People were holding onto old cameras. So of course they have some brand. But the reality was they weren't, they weren't excited to come in, to reenter the market. I think Kenji-san's point is how do we bring customers back to photography? How do we give them an experience that hasn't been purchased? So did they purchase some other brand in the past? Yes, of course, some camera, but it's not about taking from one brand to the other type of thing, it's about the new experience. And because of that ... actually, that's why the growth is happening in the market.

DE: Ah, yeah, yeah.

NM: So it's actually bringing people in. How do you excite the customer?

DE: So it's not necessarily someone who has never had a camera before in their life. But if they have a ten-year-old Canon or Nikon, then they're not really active in the market. They're not, as you say, they haven't been motivated to do anything. So you're kind of reinvigorating and bringing a lot of those people back in again. Interesting, I think that's a good point.

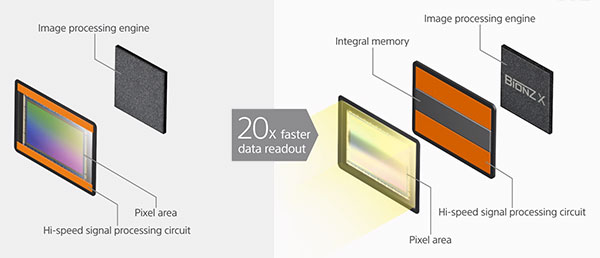

Skipping around a bit in my questions, to make sure we hit the most important ones in the limited time we have... I think the last time we spoke, or it might have been the Kumamoto tour, you were talking about how sensor technology is a really key differentiating factor for Sony. The fact that you can so tightly couple the engineering on the camera side and the engineering on the sensor side; that made a lot of sense to me, especially in the case of stacked sensor technology, because you have to change the whole architecture of the camera system as a whole to really take advantage of that. But at the same time, camera companies are in the business of developing camera architectures, and Sony Sensor wants to sell sensors, so... Will the stacked technology at some point not be a unique advantage of Sony cameras?

[Ed. Note: I'm referring, of course, to traditional camera systems here. Sony is already selling stacked sensors in smaller sizes (1/2.5 - 1/2.6") for smartphones, and started sampling a 1/2-inch stacked sensor last month. On the other hand, even in the case of smartphone camera sensors, I'm not sure if Sony is delivering the full combination of sensor, processing circuitry and memory, as seen in the most advanced sensors they're using in their own dedicated-camera systems.]

KT: First of all, stacked image sensor, the technology is open, so Sony can sell the stacked image sensor to our competitors. So like Formula 1 cars...

DE: Oh, F1 cars, yes.

KT: Yeah, the stacked image sensor is an engine. If you want to create a Formula 1 car, you need higher performance, and a good sensor, and good handling. Then the driver has to be comfortable in the F1 car. So our Alpha 9 has many technologies, including Sony stacked technology. Of course, if they like, competitors can buy this if they want to create a car. But to create a Formula 1 car is very, very difficult. And controlling it is much more difficult.

[Ed. Note: IR Senior Editor and F1 enthusiast Mike Tomkins pointed out that Kenji-san was likely referring to how engines are handled in the F1 world. Some manufacturers who build their own F1 engines also license them out to rival teams who don't want to (or lack the resources to) make their own.]

DE: And knowing what to do with the technology, that's where you have an advantage of experience for one thing, because you've been making systems using stacked-sensor technology for a while now, and you also have this very close coupling. So you were able to integrate that capability into your overall architecture.

KT: In addition to the good quality, our Alpha 9 has good battery life.

DE: Ah, yeah, got you. Yes, we noticed that the new mirrorless systems seem to have much shorter battery life than Sony's recent models. Interesting.

So at some point, will the competitors (customers of Sony Semiconductor's sensor operation) be able to say with the stacked technology, you know, "I want this much memory, and this kind of a processor here", etc., etc.? How much of that is proprietary to Sony Digital Imaging, versus how much is it part of the toolkit that Sony Sensors offers, do you know?

KT: It is difficult to answer what is proprietary, what part is Sony Digital Imaging and what [belongs to] sensors, maybe.

DE: Mmm.

KT: And we [have developed] stacked image sensor together, so yeah, there [are] quite a lot of assets [there].

DE: So they're very intertwined, and while someone may be able to ask Sony Sensors "I want some memory and a generic processor", it's not so closely integrated.

Expanding on that a little, I think autofocus is extremely important for the future. It seems to me that this is currently the big differentiator, or it's the area where technology has the most room to improve or to grow to meet user demand. And you have some very advanced technology with eye detection. At this show, you even showed us animal eye detection. I'm not sure how to ask the question, but AI is becoming an important part of all of this, for the camera to actually understand what the subject is. What can you say about how you've been developing AI? (This is where I don't know what you're doing, so I don't actually know the questions to ask...)

KT: Yeah. Deeper and deeper things. But... Listen, listen; for AI, people think that AI is the cloud.

DE: Yeah, yeah.

KT: But if we use cloud-based AI, it's very slow.

DE: Yes.

KT: But now we are [looking at] in-camera, AI. Our AI, but in-camera. So our AI chip, we call it edge computing.

DE: Yes, this is clearly an application for "edge" AI tech.

KT: The learning process is very steep and our AI also has a library.

DE: Yeah, with AI, there's two aspects, there's training and then there's recognition. Training takes an enormous amount of computing.

KT: Training is, you know, very hard.

DE: Yes, that's what needs to happen in the cloud, or on a supercomputer.

KT: Yeah. I believe that it [will take place in the cloud] [Ed. Note: background noise on the audio made Kenji-san's specific words hard to make out here; apologies for any unclarity.]

DE: Mmm... So the cameras can control, they can hold (in whatever form it might be) a library of different things that they can recognize. And so a person's eye, or a bird against the sky... all the traditional autofocus use cases. With the new technology though, is there... There must be hardware to support the AI type of processing, because that's different than regular signal processing.

KT: Hmm.

DE: So that's part of your technology stack, is that you have AI dedicated processors.

KT: Yeah, yeah, yeah. The AI processor is totally different from the lower-level signal processor.

DE: Mmm.

KT: Our signal processing is great at just making high-quality images. Our AI processing just has to find an object.

DE: It just finds objects.

KT: Object, yes, it's dedicated.

DE: Dedicated, yeah. So it's a separate chip and...?

KT: No, one chip.

DE: One chip but a separate section.

KT: Yeah. But very high speed. If the AI chip recognized an object in one second with good accuracy...

DE: It's no good, yeah. <chuckles>

KT: One hundred milliseconds, no good. It has to be less than one hundred milliseconds. Ideally, one millisecond.

DE: Wow, so ideally, one millisecond to recognize the object so you can begin the autofocus process. Because you can't start focusing on the subject until you recognize something, yeah. Yeah, so that has to be very, very fast.

This is a very tough problem, too. Back when I was in image processing algorithms and architectures, I wrote a paper that identified a key problem being when you went from what I called iconic to symbolic representations. By that, I meant that you start with just pixels that have no object-orientation associated with them, and from that you have to extract some abstract representation, to translate a random collection of pixels into a structure that says "this is an object". There's a tremendous amount of processing that has to go on in between. I'm thinking that this is where your stacked technology could become very important, that you can put a lot of low-level processing so close to the pixels.

KT: Mmm-hmm.

DE: Is that true? Is some of that very local processing preparing data to feed to the AI? Actually, let me rephrase that: What I'm wondering about has to do with the input for the AI. If the AI has to see all forty-seven million pixels together, it can't cope.

KT: No, no, no.

DE: And so it has to be reduced and you know, they...

KT: Oh, of course, of course.

DE: "There's a line here and there's a line there. Oh, wait now, these lines are connected..." So that low-level stuff, is some of that happening on the processing layer that's stacked with the sensor, maybe?

[Ed. Note: There followed a long, somewhat disjointed discussion at this point that wouldn't make much sense as a transcription, so I'll instead paraphrase and condense it below...]

It seems that my supposition was accurate, namely that a lot of low-level processing is done by the stacked processor circuitry, taking advantage of image data that's temporarily stored in the stacked memory elements.

While the main image processor has a lot of CPU horsepower, it takes a noticeable amount of time to transfer data to it from the image sensor.

While it might be possible to perform a lot of this processing back in the main image processor chip, there's just no way to get the data there quickly enough. And even once it's there, there'd still be a bottleneck between the image processor and its memory. The lowest-level image- and AF-processing involves relatively simple operations on a lot of data. One way of looking at such things is what the ratio is between memory accesses and computation. The dedicated image processor is great at doing very complex computations on blocks of data. So it excels in situations where it can gobble a chunk of data and then do very sophisticated processing on it. In contrast, the lower levels of image processing and AF algorithms involve fairly simple computations, but done at extremely high rates. The key there is to get data to and from the processing circuitry as fast as it can handle it. Since the processing at that level is relatively simple, the bottleneck very quickly becomes memory access, rather than computing ability.
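The tradeoff between memory access and computation described above can be put in rough numbers with a roofline-style estimate. The sketch below is only an illustration: the function names and every figure in it (peak throughput, bandwidth, operations per byte) are made-up assumptions, not measurements of any Sony hardware.

```python
def attainable_ops_per_sec(peak_ops_per_sec, bytes_per_sec, ops_per_byte):
    """Roofline-style bound: throughput is capped either by the processor's
    peak rate or by how fast memory can feed it, whichever is lower."""
    memory_limited_rate = bytes_per_sec * ops_per_byte
    return min(peak_ops_per_sec, memory_limited_rate)


def is_memory_bound(peak_ops_per_sec, bytes_per_sec, ops_per_byte):
    """True when memory traffic, not arithmetic, sets the ceiling."""
    return bytes_per_sec * ops_per_byte < peak_ops_per_sec


# Hypothetical processor: 100 G operations/s peak, 10 GB/s to its memory.
PEAK = 100e9
BANDWIDTH = 10e9

# Simple per-pixel work (assumed 0.5 operations per byte fetched).
simple = attainable_ops_per_sec(PEAK, BANDWIDTH, 0.5)
# Sophisticated block processing (assumed 50 operations per byte fetched).
complex_ = attainable_ops_per_sec(PEAK, BANDWIDTH, 50)

print(simple / PEAK)    # fraction of peak reached by the simple workload
print(complex_ / PEAK)  # fraction of peak reached by the complex workload
```

With these assumed figures the simple workload is held to 5% of the processor's peak because memory cannot feed it any faster, while the complex workload runs at full speed. That is the sense in which low-level image and AF processing is limited by memory access rather than by computing ability.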

This is where the distributed memory provided by Sony's stacked sensor/memory/processor technology comes in. The image data can be transferred into the stacked memory along thousands of data channels. Then, thousands of simple processors can dive in and crunch it into a more refined, but still relatively primitive form. The results may still be pretty low-level -- here's something that looks like an edge; here's something else that's similar; here's a group of pixels that're the same color; here's a distance-map of the whole frame, etc., etc. -- but the sheer amount of data being passed to higher levels of the processing stack is reduced by a factor of 10 or more. At the same time, the information that's being passed along is far more meaningful. It's no longer just a bunch of uncorrelated pixels, but rather elements that are meaningful in terms of the scene content.
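To make the idea of local data reduction concrete, here is a minimal sketch in which each small tile of a frame is boiled down to three numbers: average brightness plus two crude edge measures. It is not Sony's on-sensor processing, which isn't public; the tile size, the choice of descriptors and the names `summarize_tile` and `reduce_frame` are all assumptions made for illustration. Each tile is handled independently, which is what would let many simple processors work in parallel.

```python
def summarize_tile(frame, top, left, size):
    """Reduce one size x size tile to (mean, dx, dy), where dx and dy are the
    summed absolute differences between horizontally and vertically adjacent
    pixels inside the tile (crude edge-strength measures)."""
    total = dx = dy = 0
    for r in range(top, top + size):
        for c in range(left, left + size):
            p = frame[r][c]
            total += p
            if c + 1 < left + size:
                dx += abs(frame[r][c + 1] - p)
            if r + 1 < top + size:
                dy += abs(frame[r + 1][c] - p)
    return (total / (size * size), dx, dy)


def reduce_frame(frame, size=8):
    """Summarize every tile; assumes frame dimensions are multiples of size."""
    rows, cols = len(frame), len(frame[0])
    return [[summarize_tile(frame, r, c, size) for c in range(0, cols, size)]
            for r in range(0, rows, size)]


# Hypothetical 16 x 16 frame: dark on the left, bright from column 4 onward.
frame = [[0 if c < 4 else 100 for c in range(16)] for _ in range(16)]
summary = reduce_frame(frame)

pixels_in = len(frame) * len(frame[0])
values_out = sum(len(t) for row in summary for t in row)
print(pixels_in, values_out)  # raw pixel count vs. summary value count
```

Here 256 pixels become 12 summary values, a reduction of a little over 20 times, and only the tiles containing the brightness step report a nonzero `dx`. The later stages get less data, and what they get already says something about scene content.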

The thing is (and this was the direction of some of my earlier questions), deciding just what processing is going to be done on the sensor / memory / processor stack vs. in the main image processor is very much a system-level decision. Sony Digital Imaging gets to decide just what they want done where, and how the results will be communicated between the sensor stack and the main processor.

In the case of sensor systems being sold to competitors, though, it'll at some point come down to those companies just accepting what Sony's sensor organization provides. That's not to say that it will in any way be deficient or less capable than what Sony's DI operation is able to access, but there won't (likely) be the same ability to innovate around the partitioning of processing functions between the sensor system and the larger camera system as a whole.

It'll be interesting to see how this plays out over time. While Sony's DI organization is certainly selling a lot of camera bodies these days, it's unlikely that they represent even a significant portion of Sony's sensor output, even just within the purely photographic arena. So it'll clearly be in the interests of the sensor organization (and Sony as a whole) to help other camera makers take maximum advantage of Sony's advanced sensor integration technology.

(I'd give a lot to know just what conversations between the Sony sensor division and the other camera manufacturers are like, at a deep engineering level. I doubt I'll ever know, but it sure would be interesting to be a fly on the wall in some of those meetings! ;-) Now, back to the interview...

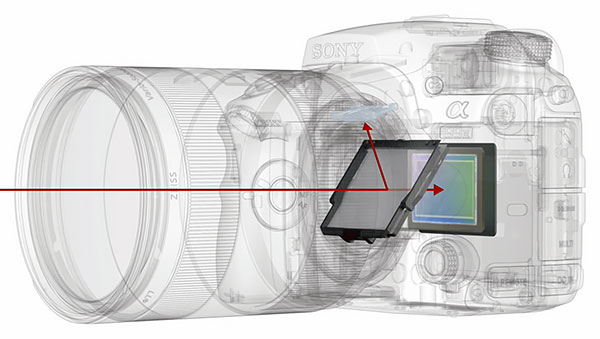

DE: Our time has become very short, so I want to move on to a question about autofocus. Back in the SLR days, we talked about line-type and cross-type AF points. But almost all mirrorless cameras are currently line-type only. Different systems are more or less sensitive in terms of how much off-axis you have to get with single-orientation subject detail before they'll finally "see" something to focus on. We're wondering, though, because it seems like there's some very basic information to feed to the AF system that's missing, if you can only see detail on one axis. Actually, I first need to ask if I'm correct in my understanding that current Sony mirrorless cameras are line-type, and second, whether your technology will allow cross-type points, or if you're thinking about incorporating them?

KT: Of course, yes. Secondly, we can create the cross sensor.

DE: You can create cross-type detectors.

KT: Oh yes, of course. But thinking about the frequency, the phase data frequency, before with separate-types of detectors [Ed. Note: Meaning separate phase-detect sensors, as found in DSLRs, vs on-chip PDAF pixels], the sampling points are very rough.

DE: Yes, very coarse, low-resolution or low-frequency sampling.

KT: So they need a cross-sensor. But right now, you know, on the main sensor, we have a very wide range of frequencies.

DE: Yes, so you can detect or be sensitive to a wide range of spatial frequencies.

KT: So just the line sensor system is enough, almost.

DE: Almost enough, yeah. You can still see situations where if the angle is just wrong, and the subject really has only horizontal detail, then it'll have a problem, but it's a very small percentage.

KT: Yeah.

DE: And you said you can form cross-type points also, but the pixels are either looking left or right. To do a vertically oriented point, would they have to be looking at the two sides of the lens, top and bottom, as well? Because I'm thinking the focus pixels are masked, so some will see light that way <gesturing to the right>, some see light that way <gesturing from the left>. And then you correlate what the differently-oriented pixels see. But for the other direction, the other axis, don't they have to be looking at the top and bottom of the lens?

KT: But, I would say, it's possible to form the cross sensor... Our image sensor can read out pixels one by one.

DE: Yes, individual pixels.

KT: Not the sequence, not just the line sequence of pixels. So we can read out pixels for creating the cross sensor...

DE: Ahhh, hai, hai. Yeah, I was thinking it mattered which direction each pixel is looking, but all you need is light rays coming from any two sides of the lens, to see the different ray paths. And so you can line those pixels up vertically, and even though they're looking left and right, you can correlate along a vertical axis. So yeah, you can form a point in any direction you want, even though the individual pixels are masked so they're seeing light coming from the left and right.
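For readers unfamiliar with the correlation step mentioned in this exchange: when a subject is out of focus, the pixels looking at opposite sides of the lens see copies of the same detail displaced relative to each other, and the AF system searches for that displacement. The sketch below is a minimal illustration under stated assumptions. Matching by mean absolute difference is a common, simple choice rather than anything Sony has confirmed, and the name `best_shift` and the sample values are hypothetical. The function only sees two one-dimensional sample sequences; which pixels feed those sequences is a readout decision outside this sketch.

```python
def best_shift(left_view, right_view, max_shift):
    """Return the integer shift s (within +/- max_shift) for which
    right_view[i + s] best matches left_view[i], judged by the mean
    absolute difference over the overlapping samples."""
    n = len(left_view)
    best, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        cost = count = 0
        for i in range(n):
            j = i + s
            if 0 <= j < n:
                cost += abs(left_view[i] - right_view[j])
                count += 1
        if count and cost / count < best_cost:
            best, best_cost = s, cost / count
    return best


# Hypothetical samples: the same bright detail, displaced by two positions.
left_view = [0, 0, 0, 10, 50, 10, 0, 0, 0, 0]
right_view = [0, 0, 0, 0, 0, 10, 50, 10, 0, 0]
print(best_shift(left_view, right_view, 3))  # displacement between the views
```

A result of zero would indicate the two views line up, meaning the detail is in focus; the sign and size of a nonzero result tell the lens which way, and roughly how far, to move.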

KT: I have no idea if we need cross sensors on our image sensor because the line or individual one is enough, or the...

DE: Yeah, you feel like, yeah, so you're thinking there's no need to go in that direction. You could, but you don't see a need for it.

Good, I think that's probably all we have time for. Thank you very much for your time. It's always, always a pleasure!

KT: Thank you very much.

So there you have it: Sony's take on the newly competitive market they're in, how they'll continue to use sensor technology to their advantage, and a bit about autofocus.

What do you think? Leave your thoughts, questions and comments below, and I'll try to respond to individual questions as best I can, between the normal craziness of running IR and yet more upcoming business travel.