Panasonic Q&A at CP+ 2023: S5II/IIx rationale, PDAF and autofocus, Dual Combined Gain and more

posted Wednesday, March 15, 2023 at 5:04 PM EDT

I’m writing this a few days after the CP+ 2023 photo trade show in Yokohama, Japan, sitting happily in a hotel room in northern Japan (Aizuwakamatsu, Fukushima prefecture). It’s just the first of eight interviews with photo-industry executives that I’ll be publishing in the coming weeks. This piece should go up shortly after my return to the US in the second week of March. I’ll try to maintain a reasonable pace for the rest, but they’re all very long articles, and I have a lot of transcribing and editing to do.

This was the first time the show had been held in three years, because of travel restrictions from the COVID-19 pandemic. That’s a long time in the photo industry these days, with AI “deep learning” technology rapidly revolutionizing camera autofocus and subject-recognition systems, and optical design advancing by leaps and bounds.

The show seemed bursting with enthusiasm and positive energy this year, with crowds bigger than I remember seeing in the last pre-COVID session in February 2019. I don’t know how the official attendance figures compare, but it felt like there was a very high level of energy and excitement in the air.

This article is a digest of my meeting with Yosuke Yamane, Executive Vice President, Panasonic Entertainment & Communication Co., Ltd.; Director, Imaging Business Unit. Yamane-san is arguably the person most responsible for Panasonic’s recent renaissance, built around their full-frame S models, new L-mount lenses, and cooperation with other members of the L-mount alliance.

Yamane-san always gives an excellent interview, pressing his staff hard at all levels to come up with concise, coherent, comprehensible and complete answers to my questions. (As I mention below, I think his staff groans inwardly a little whenever they see “Dave-san” questions inbound :-)

Without further ado, here’s the account of my conversation with Yamane-san. I’ve set off my own comments and edits in italicized text for clarity.

How’s the market looking, and how is Panasonic doing in it?

DE: The market and Panasonic’s sales were trending upward quite well when we met in late July. How has it done since then, and what do you project for 2023?

YY: In 2022 the global market for digital cameras showed double-digit growth in terms of monetary value due to an increase in photography opportunities following the end of the COVID-19 crisis. In particular, the market was driven by full-frame mirrorless cameras, which are expected to grow by more than 30%. We were also able to achieve double-digit growth in 2022, with our full-frame mirrorless camera line in particular growing faster than the industry.

In 2023 we expect the global market to remain flat, but full-frame mirrorless cameras to continue replacing single-lens reflex cameras and to keep growing. We are determined to go on the offensive by significantly expanding full-frame mirrorless cameras, centering on the new products S5II and S5IIX that will be introduced this month.

I knew that the camera market was recovering, but had no idea it had been this strong! I suspect we’re still below pre-COVID levels, but double-digit growth overall, and more than 30% for full-frame mirrorless, is excellent. While I can’t disclose specific numbers that Yamane-san shared with me, it’s also highly significant that Panasonic is outperforming the market. After years of struggle, they now seem to be carving out a very nice niche for themselves, especially in the video space. The new S5 Mark II and S5 Mark II X are clearly contributing to this, as both are very strong products at competitive prices.

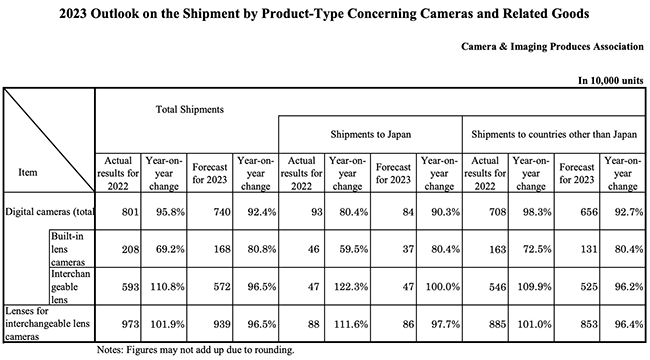

Looking at the table above with CIPA’s projections for 2023, it seems that CIPA expects both ILC and lens sales to decline somewhat in 2023. Those figures don’t break out mirrorless models separately, though, and mirrorless is the segment Panasonic plays in. The table also shows only total units shipped, rather than the total value of the products involved. We continue to see a shift to higher-end models, so dollar volume may be increasing even though unit sales are trending down.
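To make the units-versus-dollars point concrete, here is a tiny Python calculation. The figures are entirely hypothetical (they are not CIPA's numbers); the only point is that dollar volume is units times average selling price, so a modest drop in units can be outweighed by a shift toward pricier models.

```python
def dollar_volume(units: float, avg_price: float) -> float:
    """Dollar volume is simply units shipped times average selling price."""
    return units * avg_price


# Hypothetical figures for illustration only; these are NOT CIPA data.
units_2022, avg_price_2022 = 1_000_000, 1_500.0
units_2023 = units_2022 * 0.95          # unit shipments fall 5%
avg_price_2023 = avg_price_2022 * 1.10  # mix shifts to pricier models: +10%

change = dollar_volume(units_2023, avg_price_2023) / dollar_volume(
    units_2022, avg_price_2022
) - 1
print(f"Units: {units_2023 / units_2022 - 1:+.0%}, dollar volume: {change:+.1%}")
```

With these made-up inputs, units fall 5% while dollar volume rises about 4.5%.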

Are you making progress against the S5 Mark II backlog?

DE: I see that some shipments of the S5 Mark II will be delayed due to unexpectedly high demand. (Given its capabilities, I’m not surprised that it’s very popular!) I know you can’t share specific numbers, but can you say roughly by how much orders exceeded your projections? (20%, 50%, 100%?) When do you project that production will catch up to customer orders?

YY: We have received orders for the S5II/S5IIX that far exceeded our expectations in each market, and some customers who have already made reservations could not receive their deliveries on the day of release. We are doing our best to deliver as soon as possible. I can't tell you the percentage or the timing, but we will do our best to fully meet the market demand as soon as possible.

As I said, I wasn’t surprised to hear that there’s a lot of demand for the S5II; it offers a phase-detect AF system that’s long been sought after by Panasonic users, delivers great stills quality and has absolutely top-drawer video capability for the price point. (Only $1,997 US body-only or $2,300 with the new 20-60mm kit lens.) I think that Panasonic themselves were expecting strong sales, but it sounds like the level of demand was way beyond anything they’d expected. Yamane-san didn’t say by how much sales are exceeding their forecasts, but my impression is that it isn’t just a small margin like 10-20%. I predict that demand will be similarly strong for the forthcoming S5 IIx model. Yamane-san also didn’t commit to an ETA for when their manufacturing would catch up, but you can bet they’re all-in on ramping production as fast as they possibly can.

Why release two such similar cameras? (S5II/S5IIx)

DE: The S5 II and S5 IIX are obviously very similar cameras in terms of features and price. Can you speak a bit to the decision to deliver two nearly-identical models to the market at nearly the same price? Are there any hardware differences between the two cameras at all, aside from the different colorway, or are the differing capabilities due entirely to software? (For example, do they have different processor hardware?) Why are some capabilities of the S5 IIX able to be unlocked on the S5 II via a paid firmware update, but not others?

YY: Under the LUMIX brand tagline of “Motion.Picture.Perfect,” we stand by creators who are particular about creating high-quality works with video and still images, and support them so that they can fully exercise their creativity. This is the pillar of our strategy.

On the other hand, creators are also changing with the times, and the number of creators who edit their own content and send it out through SNS [Social Networking Services, including YouTube] is increasing explosively. We believe that we can help more creators by getting closer to the new "solo-operation creators" who do everything from shooting to editing by themselves.

Our strategy is to serve a wide range of creators in the areas of video and still images, and we will focus on solo-operation creators and support a wider range of users from low-end to high-end, conveying the quality of LUMIX and providing support.

While the S5II is an all-round camera that achieves high performance in both still images and video and is aimed at a wide range of users, the S5IIX is a model that has further enhanced video functions from the S5II. With high-grade video recording modes such as ALL-Intra and ProRes that can also be used by professionals, and video RAW data output via HDMI, as well as features such as live streaming, it targets creators who want to create high-level video content. We have adopted an all-black design for its body to support such production from behind the scenes, and we are also trying to differentiate ourselves in terms of design.

This makes sense to me; as Yamane-san explained in our live discussion, there are really two very distinct markets for the sort of video production the S5II/IIx hardware can support, with their own separate sets of desired features and user interface design. A lot of features needed by high-end professional video producers aren’t needed by any but the highest-end YouTube creators, and including them in the UI would just make it cluttered and inconvenient to navigate. On the other hand, if you do need those functions, it’s only a matter of a couple hundred dollars to go with the S5 IIx version.

The same hardware can serve the two use cases equally well, but the two classes of users have different needs and expectations of the readily-accessible feature set. Hence the two separate models.

As an aside, the “behind the scenes” remark about the S5 IIx’s all-black body refers to the reason why black camera bodies are so common in general: They won’t as easily appear in reflections from subject elements, nor disturb scene lighting by scattering light.

I unfortunately didn’t realize until days after the interview that Yamane-san didn’t answer my last question about differences in upgrade options, but after a little thought, that makes sense to me as well. While similar, the two cameras have different firmware, so making all upgrades available to both platforms would double the amount of code they need to maintain, and could make tech support that much more difficult as well. (“Just which combination and permutation of firmware is the user working with?” would be another confounding variable in solving users’ support questions.)

How did Leica contribute to the S5 II/IIx processor design?

DE: Your launch package for the S5 II and S5 IIX said that the image processing in them represents the first fruits of the L2 alliance. [This was a special alliance announced last year between Leica and Panasonic. It has no impact on compatibility or interoperability within the broader L-mount alliance with Sigma, but is rather a joint development effort between the two companies to leverage their combined strength for common technology elements.] Where specifically was Leica’s presence felt? Did they have expertise relative to image processing algorithms or processor architecture, or was it more something like color management and tone curves?

YY: The engine installed in the S5II/S5IIX is a new engine that Leica and Panasonic worked on together to improve image quality through high-performance image processing. By combining Leica's commitment to image quality cultivated over its long history with Panasonic's innovative digital technology, we have achieved high resolution performance, rich color expression, and natural noise reduction.

We jointly developed the engine for the S5II/S5IIX, but we are continuing to discuss future themes, mainly core devices. Both companies will jointly develop technologies to make their respective products more attractive in the future.

Although it is difficult for us to specifically tell you about Leica's technology, we have jointly developed a product that brings together the image quality improvement technology and know-how of both companies. It is certain that Leica's image quality improvement technology and know-how, which have been cultivated over its long history, are significant.

This was roughly what I expected: Panasonic has a lot of expertise in processor technology. (An example of this is the fact that they were the first hybrid camera maker to offer 4K60p video in-body; I’ve been told that the reason was that they had a leg up in processor technology over the rest of the industry.) On the other hand, Leica has a fabled reputation for color and tonal representation, and apparently had some expertise to lend in the area of noise reduction as well.

The L2 Alliance has been very interesting to me, and I think was a very smart move for Panasonic and Leica to make. The modern camera market depends so strongly on continuing technological advancements that the idea of two companies involved in largely different market segments working together makes a lot of sense. They basically have twice as many R&D resources to devote to joint projects as either could afford separately. I’m sure the S5II/IIX processor is only the first of many such collaborations; I’m curious what they’ll choose to work on together next.

How has PDAF changed your fundamental AF algorithms?

DE: When I interviewed Yamane-san last summer, he indicated that phase-detect AF was coming soon, and now it’s here in these two new models. It seems to me that PDAF (phase-detect autofocus) will provide initial distance information more quickly than DFD (depth from defocus, a unique Panasonic technology) did, because it only needs a single “look” to get distance information. Has that resulted in changes to your AF tracking algorithms? (It should be a little quicker on initial focus determination, but does it also improve tracking as well?)

YY: Yes, the AF tracking algorithm has been revamped with the S5II.

DFD and Contrast AF [contrast-detect autofocus], which have been refined over the years, achieve extremely high focus accuracy by constantly searching for the focus position while moving the focus lens very precisely. On the other hand, PDAF excels in high-speed performance, allowing the subject's focus position to be determined instantaneously. With the S5II, by combining the performance of DFD, contrast AF and PDAF, we have achieved unprecedented high-precision and high-speed AF tracking performance.

In order to realize high-speed AF with PDAF, it is necessary to move the lens at a correspondingly high speed. The AF system has been designed to show a very fine and agile response for DFD/contrast AF. This lens design and control contribute to further drawing out the high-speed performance of PDAF.

AF is a technology whose performance depends on the sensor, lens, processing engine and algorithm as a whole. The S5II's AF performs well not only because it is equipped with a PDAF sensor, but also thanks to the technology we have accumulated so far.

I asked some further questions about how they specifically combine PDAF, CDAF and DFD for optimum results. While they obviously couldn’t get too far into proprietary details, it seems that they may use one method alone or a couple in combination, depending on the subject, lens, aperture, etc. In some situations PDAF alone is sufficiently accurate, but at other times adding CDAF or DFD will improve the ultimate accuracy.

Contrast-detect autofocus is by definition the most accurate, because it’s actually evaluating the sharpness of the image on the sensor’s surface. CDAF is slower, though, because it tells you neither the amount of focus error nor whether you have a front- or back-focused condition. CDAF evaluates the sharpness of the image, makes a small adjustment to the focus setting and measures the sharpness again. If it got better, it knows it needs to keep going in that direction; if it got worse, it knows it has either gone the wrong way or passed the point of peak sharpness, so it should go back. DFD can help with this, because the camera can figure out from the detailed characteristics of the blur how far out of focus the image is and which direction the focus needs to move.

In any case, in some situations the AF system will use PDAF to rapidly jump to an approximately correct setting, then DFD/CDAF to fine-tune for maximum sharpness.
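The CDAF loop described above is essentially a hill climb. Here is a minimal Python sketch of that general technique, assuming a made-up sharpness curve that peaks at a lens position of 3.7 (arbitrary units) and treating PDAF as a single reading that lands the lens near the peak. `SimulatedSensor`, the step sizes and the PDAF error are all hypothetical; this is not Panasonic's algorithm.

```python
class SimulatedSensor:
    """Hypothetical sensor: returns a contrast score that peaks at best_pos."""

    def __init__(self, best_pos: float):
        self.best_pos = best_pos
        self.reads = 0  # how many sharpness measurements ("looks") were taken

    def sharpness(self, lens_pos: float) -> float:
        self.reads += 1
        return 1.0 / (1.0 + (lens_pos - self.best_pos) ** 2)


def cdaf_hill_climb(sensor, start, step=1.0, min_step=0.01):
    """Contrast-detect AF: step, re-measure, reverse and shrink the step when sharpness drops."""
    pos = start
    current = sensor.sharpness(pos)
    direction = 1.0  # CDAF doesn't know front- vs back-focus, so it just guesses
    while step >= min_step:
        candidate = pos + direction * step
        value = sensor.sharpness(candidate)
        if value > current:
            pos, current = candidate, value  # sharper: keep going this way
        else:
            direction = -direction  # worse: wrong way, or past the peak
            step /= 2               # come back with finer steps
    return pos


def hybrid_af(sensor, pdaf_estimate, fine_step=0.1):
    """PDAF jumps close in one look; CDAF then fine-tunes with small steps."""
    return cdaf_hill_climb(sensor, start=pdaf_estimate, step=fine_step)


if __name__ == "__main__":
    slow = SimulatedSensor(best_pos=3.7)
    cdaf_pos = cdaf_hill_climb(slow, start=0.0)
    fast = SimulatedSensor(best_pos=3.7)
    hybrid_pos = hybrid_af(fast, pdaf_estimate=3.6)  # hypothetical PDAF error of 0.1
    print(f"CDAF only:   pos {cdaf_pos:.3f} after {slow.reads} looks")
    print(f"PDAF + CDAF: pos {hybrid_pos:.3f} after {fast.reads} looks")
```

Both paths end up near the peak, but starting from the PDAF estimate with a small step needs far fewer sharpness measurements, which is the speed advantage of the hybrid approach.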

The bottom line is that PDAF has in fact resulted in a fundamental reworking of the AF algorithm, such that the S5II/IIx can now focus quickly and very accurately, leveraging the best of all three methods.

In particular, the explicit distance information provided by PDAF has significantly improved focus-tracking performance for moving subjects.

Will we see PDAF on Micro Four Thirds bodies in the future?

DE: Now that phase-detect autofocus has come to the S series, will we see it in Micro Four Thirds (MFT) cameras as well?

YY: For cameras to be released in the future, we will adopt the optimal autofocus method for each one, while assessing the target users and customer benefits.

We will consider installing PDAF depending on the characteristics of the model, not only for full frame but also for MFT, so please look forward to it.

Companies never comment explicitly on future plans, so this is as close to an absolute “yes” as you’ll ever see :-) I’d say it’s a pretty safe bet that we’ll see Micro Four Thirds bodies from Panasonic with PDAF onboard.

What AF changes have you made beyond just PDAF?

DE: Besides the fundamental differences associated with PDAF as compared to DFD, have your AF and subject recognition algorithms advanced in other ways as well? You explained to me last time that your approach is to concentrate on improving the handling of specific subject types that are important to professional photographers, rather than simply expanding the range of subjects that can be recognized. What areas are you currently focusing on for the next firmware update?

YY: Yes, the advancement of the S5II is more than just PDAF. The AF and subject recognition algorithms have also evolved significantly. We haven't changed the idea of focusing on scenes with people, and we improved it with the S5II. As I said last time, we are particular about improving scenes that are important for professional photographers. (1) Scenes where the subject is approaching the camera directly [these can be particularly challenging, see my comments below], (2) scenes with point light sources such as illumination, (3) backlit scenes such as dusk, (4) low-light scenes such as darkness, (5) scenes for product reviews and (6) scenes with multiple subjects. In those 6 scenes, we raise AF precision.

In backlight or low-light environments, the subject may appear to be blackened at first glance, but the internal algorithm has been revised to recognize the subject even from a very small signal. We have also incorporated an algorithm to identify subjects using distance information, even in scenes with multiple people. In this way, the subject recognition algorithm has evolved significantly with the S5II, but we believe that the needs of professional customers are still diverse.

For example, we understand the needs of recognizing smaller objects and wanting to pinpoint them, and the need of wanting to make a subject in the background stand out while intentionally including a blurred subject in the foreground. In order to be able to capture and focus on the subject even in such a difficult environment, we will continue to take on technical challenges.

When I last spoke with Yamane-san, he described their strategy as focusing (no pun intended ;-) on the kinds of subjects most important to professional photographers, specifically scenes containing people. This time he expanded on that with six additional scene or subject types, as described above. I’ve heard many companies discuss the range of subject types and use cases they concentrate on for AF development, but Panasonic seems unique in their focus (sorry, I can’t get away from that word) on the needs of professional/commercial photographers. Some of these use cases will of course extend to amateurs as well, but what you don’t hear them talking about are things like birds in flight or racecars. Rather than trying to cover the entire range of possible subjects, they’ve explicitly concentrated their efforts on the needs of professional shooters.

This makes a lot of sense to me, especially given Panasonic’s place in the market. They’ve previously been very much an underdog, with a relatively small market share. Rather than trying to be all things to all people, they’ve clearly identified their target customer (professional photographers and videographers) and have brought all their resources to bear on satisfying the needs of that group.

This strategy seems to be working very well for them, as witnessed by the success of the S5 II/IIX and the fact that their growth is significantly outpacing the market as a whole.

A technical side note about why subjects approaching the camera are particularly challenging: When tracking a subject, what matters is how much the focus needs to change from one frame to the next, and that depends on the relative change in subject distance, not the absolute change. This makes it a nonlinear process: The relative difference between 20 and 25 meters (25%) is much less than that between 5 and 10 meters (100%). So as a moving subject gets closer and closer to the camera, a bigger and bigger focus adjustment is needed between successive shots. A basketball player at the far end of the court is much easier to focus-track than one who’s practically on top of you. I’m sure every camera maker is working on this challenge, but again, this was the first time I’ve heard it explicitly expressed.
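Here is a rough Python check of that claim using the simple thin-lens equation, 1/f = 1/u + 1/v, where u is the subject distance and v is the lens-to-sensor (image) distance. It assumes a hypothetical 50mm lens treated as a single thin lens; real lenses focus by moving internal groups, so the absolute micrometre figures are only indicative, and the comparison between the two cases is what matters.

```python
def image_distance(subject_m: float, focal_m: float) -> float:
    """Thin-lens equation 1/f = 1/u + 1/v, solved for the image distance v."""
    return 1.0 / (1.0 / focal_m - 1.0 / subject_m)


def focus_shift_um(from_m: float, to_m: float, focal_m: float = 0.050) -> float:
    """Focus travel (micrometres) needed when the subject moves from from_m to to_m."""
    return abs(image_distance(to_m, focal_m) - image_distance(from_m, focal_m)) * 1e6


far = focus_shift_um(25, 20)   # subject approaches from 25 m to 20 m
near = focus_shift_um(10, 5)   # subject approaches from 10 m to 5 m
print(f"25 m -> 20 m: {far:.1f} um of focus travel")
print(f"10 m -> 5 m:  {near:.1f} um of focus travel ({near / far:.1f}x more)")
```

The subject moves 5 meters in both cases, yet under this model the near move needs roughly ten times as much focus travel, because the required shift follows the change in 1/distance.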

Another quick side note about subjects in the background with blurred foreground elements: It’s long been a heuristic shortcut for AF systems to assume that the object closest to the camera will always be the subject. This is why wide-area AF is often confused by foreground objects, forcing you to switch to spot-AF. Panasonic wants its cameras to be able to intelligently recognize when the subject is in fact in the background.

Is there any chance the S5II’s AIAF could come to existing models?

DE: AI is now an essential part of AF and subject recognition. Presumably the S5II models are taking advantage of improvements you’ve made in your machine learning algorithms since the last model was released. Will these improvements be available to earlier bodies as well via firmware updates, or do they depend on new hardware in the S5II’s processor?

YY: Subject recognition by AI has high recognition performance and is a very important technology that is constantly evolving. With the S5II, along with the evolution of the AI algorithm, the required performance was achieved by installing a new engine that can perform very high-speed calculations. We will continue to study adaptation of the algorithms to previous bodies, but the engine’s processing performance itself is different, so it is difficult to implement AI performance equivalent to S5II. We hope that you will consider the S5II to be completely new in terms of its AI performance and distance measurement performance with PDAF.

This was pretty much what I’d expected: The new processor in the S5II/IIX has significantly more advanced hardware, likely including dedicated neural-net processing capability that simply isn’t present in earlier models.

Taking advantage of the dramatically enhanced hardware, Panasonic developed completely new autofocus and subject-recognition algorithms that just hadn’t been possible previously. As Yamane-san said, the S5II represents an entirely new generation of AF capability for Panasonic.

Bottom line, there’s really no way to bring anything approaching the S5II/IIX’s subject recognition and AF capability to earlier models.

Why is Dual Combined Gain used in the GH6 but not other models?

DE: This is the first of two reader questions: Why was combined dual gain chosen for the GH6 instead of the dual native ISO way we see in other cameras, including the GH5S?

This ended up being a very long and complex answer that involved Yamane-san going out to the booth floor to bring back a sensor engineer to dive into the details with me. (This is one of the great things about interviewing Yamane-san: He presses his staff very hard to come up with solid, concise and accurate answers to my questions, often asking for multiple revisions to their initial answers when I send over my questions. I think his staff groans a little whenever they see a set of “Etchells questions” coming over the wire! :-)

It turns out the answer was somewhat as I expected, and the premise of the question is correct: The GH6 uses Dual Combined Gain, whereas the S5 II/IIX (for instance) use Dual Native ISO.

The short version of the answer is that Dual Native ISO gives better noise performance and dynamic range at high ISOs, but at the cost of a good bit of added complexity in the pixel-readout circuitry. Combined dual gain helps with dynamic range, but does relatively little for noise levels. The full explanation is a little technical, though, so bear with me. Here’s what you need to know about how image sensors work.

First, pixels turn incoming light (photons) into electrical charge (electrons).

The sensor needs to turn the charge into a voltage so it can be measured by the A/D converter.

This is done by dumping the charge onto a capacitor. A capacitor is simply an electronic component that stores charge. When you pump charge into a capacitor, the voltage across it rises. The relationship is simple, V = q/C, where V is voltage, q is charge, measured in coulombs, and C is the size of the capacitor (its capacitance), measured in farads. (Geeky side-note that just about no one will care about <grin>: Coulombs and farads are very large quantities. A coulomb is about 6 × 10¹⁸ electrons. That’s 6 times a billion, billion electrons. If you dump a coulomb of electrons into a 1-farad capacitor, the voltage across it will rise by just one volt. An amp of current is one coulomb per second, so if you routed a 1-amp current into a 1-farad capacitor, the voltage would increase by one volt per second.)
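For the geekily inclined, here is the same arithmetic in Python. The electron charge is the exact SI value; the 50,000-electron full well and 8 fF (femtofarad) readout capacitor are hypothetical round numbers chosen for illustration, not figures for any Panasonic sensor.

```python
ELECTRON_CHARGE_C = 1.602176634e-19  # coulombs per electron (exact SI value)


def electrons_per_coulomb() -> float:
    return 1.0 / ELECTRON_CHARGE_C


def capacitor_voltage(electrons: float, capacitance_f: float) -> float:
    """V = q / C, with the charge q given as a count of electrons."""
    return electrons * ELECTRON_CHARGE_C / capacitance_f


print(f"{electrons_per_coulomb():.3e} electrons per coulomb")
# One coulomb dumped onto a 1-farad capacitor raises it by one volt.
print(f"{capacitor_voltage(electrons_per_coulomb(), 1.0):.3f} V")
# Hypothetical pixel: a 50,000-electron full well read onto an 8 fF capacitor.
print(f"{capacitor_voltage(50_000, 8e-15):.3f} V")
```

Tiny charges need tiny capacitors: it takes only a few femtofarads for a pixel's worth of electrons to produce a voltage of around a volt.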

As I just mentioned, sensors read out a pixel’s charge by shifting it onto a tiny capacitor before sending it to an amplifier circuit and then on to the A/D converter. The capacitor is sized so a completely full pixel’s worth of charge will result in the maximum voltage that the A/D converter can measure without saturating.

When you’re shooting at higher ISOs though, the amount of charge in the pixel is much, much less than at base ISO. (For instance, in a sensor with a base ISO of 100, the pixel wells are at most only 1/16 full when shooting at ISO 1,600.) This results in very small voltages on the readout capacitor, so the signal is smaller relative to noise voltages elsewhere in the system (eg, in the amplifiers or A/D converters).

You can improve high-ISO performance somewhat by simply using a smaller capacitor to read out the charge. A capacitor ¼ the size of the regular one will result in readout voltages 4x higher. This means the image signal is boosted 4x, while the noise from the amplifiers and A/D converters stays the same, so the relative noise level is ¼ of what it would be with the full-size capacitor we first talked about.
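Here is a small Python model of that argument. It assumes hypothetical values (a 50,000-electron full well at base ISO 100, an 8 fF capacitor, and a fixed 0.5 mV of downstream amplifier/A/D noise) and deliberately ignores photon shot noise, which the capacitor can't change. That omission is one reason the real-world benefit is smaller than this idealized 4x.

```python
ELECTRON_CHARGE_C = 1.602176634e-19  # coulombs per electron

# Hypothetical sensor values, for illustration only.
FULL_WELL_E = 50_000         # electrons in a full pixel at base ISO
BASE_ISO = 100
BIG_CAP_F = 8e-15            # sized so a full well gives roughly 1 V
SMALL_CAP_F = BIG_CAP_F / 4  # the 1/4-size capacitor from the text
DOWNSTREAM_NOISE_V = 0.5e-3  # amplifier + A/D noise, same whichever cap is used


def max_electrons_at(iso: float) -> float:
    """At higher ISOs the brightest usable exposure fills only base/iso of the well."""
    return FULL_WELL_E * BASE_ISO / iso


def signal_to_downstream_noise(electrons: float, cap_f: float) -> float:
    """Readout voltage (V = q/C) divided by the fixed downstream noise voltage."""
    return (electrons * ELECTRON_CHARGE_C / cap_f) / DOWNSTREAM_NOISE_V


electrons = max_electrons_at(1600)  # 1/16 of the full well
big = signal_to_downstream_noise(electrons, BIG_CAP_F)
small = signal_to_downstream_noise(electrons, SMALL_CAP_F)
print(f"ISO 1600: {electrons:.0f} e-, ratio big cap {big:.0f}, small cap {small:.0f}")
print(f"Improvement from the smaller capacitor: {small / big:.1f}x")
```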

Dual-native ISO means that each pixel has two capacitors in it, a large and a small one. When shooting at lower ISOs, the larger cap is used, so the camera can record bright scenes without saturating or blowing out the highlights. When you’re shooting at higher ISOs though, the camera tells the sensor to switch and use the smaller cap, boosting the signal level and reducing the effect of noise elsewhere in the system.
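The switching logic is simple enough to sketch in Python. This hypothetical model uses a made-up pixel (50,000-electron full well, base ISO 100), a 1 V A/D input range and an assumed switch-over at ISO 640; none of these are published Panasonic specifications. It shows why both capacitors are needed: a full well at base ISO would overdrive the A/D converter on the small capacitor, but above the switch point the well can only be partly full, so the small capacitor is safe and gives a bigger signal.

```python
ELECTRON_CHARGE_C = 1.602176634e-19  # coulombs per electron

# Hypothetical values for illustration; not any real sensor's specifications.
FULL_WELL_E = 50_000
BASE_ISO = 100
ADC_MAX_V = 1.0          # largest voltage the A/D converter can measure
BIG_CAP_F = 8.1e-15      # full well -> just under 1 V
SMALL_CAP_F = BIG_CAP_F / 4
SWITCH_ISO = 640         # assumed point where the sensor switches capacitors


def choose_capacitor(iso: float) -> float:
    """Dual native ISO: large capacitor at low ISOs, small one at high ISOs."""
    return SMALL_CAP_F if iso >= SWITCH_ISO else BIG_CAP_F


def max_electrons_at(iso: float) -> float:
    """Brightest usable exposure at this ISO fills only base/iso of the well."""
    return FULL_WELL_E * BASE_ISO / iso


def readout_volts(electrons: float, cap_f: float) -> float:
    return electrons * ELECTRON_CHARGE_C / cap_f


for iso in (100, 400, 640, 3200):
    cap = choose_capacitor(iso)
    volts = readout_volts(max_electrons_at(iso), cap)
    label = "small" if cap == SMALL_CAP_F else "large"
    print(f"ISO {iso}: {label} cap, brightest pixel reads {volts:.2f} V")

# Why not always use the small capacitor? A full well at base ISO would clip.
print("Full well on small cap clips:", readout_volts(FULL_WELL_E, SMALL_CAP_F) > ADC_MAX_V)
```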

While the benefit isn’t exactly 1:1, dual-native ISO sensors produce lower noise levels (and thus also better effective dynamic range, thanks to cleaner shadows) than conventional ones. The tradeoff is that the pixel cell design becomes a good bit more complex, and manufacturing yields are somewhat lower as a result, which increases the cost. There may well be secondary limitations to the dual-native ISO approach, such as slower readout speeds, but that’s beyond my knowledge.

The GH6 takes the other approach, Dual Combined Gain, because the high-ISO noise of its sensor was already quite good, but its relatively small pixels resulted in lower dynamic range. Using Dual Combined Gain above ISO 800 significantly improved its dynamic range (Panasonic rates it at more than 13 stops), while also slightly improving high-ISO noise in the shadows.

The combined dual gain approach is rather different and a little simpler in terms of the pixel circuitry itself. Rather than using two capacitors, the sensor has two separate sets of readout amplifiers, with lower and higher gains (amplification factors), and the camera can choose which one it wants to use the data from. In bright regions, the low-gain amplifier delivers a usable signal, but the high-gain one is saturated. In the shadows though, the low-gain amplifier’s signal is very small, so the output of the high-gain amp is more useful.

The use of two separate amplifiers can have a small impact on noise, but the main benefit is that it moves shadow information higher into the A/D converter’s range, so there are more usable bits of data coming out of it. With more bits of data available, you can avoid quantization effects in the deep shadows (basically the shadows become smoother), and the camera’s noise reduction algorithms have more to work with and so can produce better-looking results.
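Here is a minimal Python sketch of the idea, assuming a hypothetical 12-bit A/D converter, a 40,000-electron full well, and a high-gain path at 8x the low-gain path. It is a generic illustration of merging two gain readouts, not Panasonic's implementation, whose details weren't discussed, and it ignores read noise. The last lines count how many distinct A/D codes a deep-shadow range of 0 to 100 electrons gets from each path.

```python
ADC_BITS = 12
ADC_MAX_CODE = 2**ADC_BITS - 1         # 4095
FULL_WELL_E = 40_000                   # hypothetical full well
LOW_GAIN = ADC_MAX_CODE / FULL_WELL_E  # codes per electron: full well -> top code
GAIN_RATIO = 8                         # hypothetical high/low gain ratio
HIGH_GAIN = LOW_GAIN * GAIN_RATIO


def adc(value: float) -> int:
    """Quantize to an integer code, clipping at the ends of the A/D range."""
    return min(ADC_MAX_CODE, max(0, round(value)))


def read_dual_gain(electrons: float) -> tuple[int, int]:
    """Read the same pixel charge through both amplifiers."""
    return adc(electrons * LOW_GAIN), adc(electrons * HIGH_GAIN)


def combine(low_code: int, high_code: int) -> float:
    """Prefer the high-gain sample unless it saturated; return low-gain units."""
    if high_code < ADC_MAX_CODE:
        return high_code / GAIN_RATIO  # finer steps in the shadows
    return float(low_code)             # highlights: only low gain is usable


shadow_range = range(101)  # 0..100 electrons of deep-shadow signal
low_levels = {read_dual_gain(e)[0] for e in shadow_range}
high_levels = {read_dual_gain(e)[1] for e in shadow_range}
print(f"Distinct shadow codes: low gain {len(low_levels)}, high gain {len(high_levels)}")
print(f"Bright pixel (full well): {combine(*read_dual_gain(FULL_WELL_E)):.0f}")
```

Under these assumptions the high-gain path spreads the deepest shadows over several times as many codes, which is the "more usable bits" effect: smoother shadow gradations, and more for noise reduction to work with.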

So dual combined gain sensors can deliver higher effective dynamic range by producing cleaner, more detailed shadows, but they have less impact on high-ISO performance than dual-native ISO does. They are, however, simpler and easier to produce.

With big fat full-frame pixels like those in the S5II/IIX, dynamic range is less of a challenge than with the smaller pixels on an MFT camera like the GH6, so there’s not as much need for Dual Combined Gain to extend it.

As noted above, Dual Combined Gain met the design goals of the GH6 by giving it more than 13 stops of dynamic range when shooting at ISO 800 and above. By contrast, the larger pixels and low noise of the S5 Mark II’s sensor provided good dynamic range to begin with, while its Dual Native ISO pixel circuitry makes for very clean images and video at high ISO settings.

Yamane-san noted that the S5II’s dynamic range performance has been very well received by the market, without needing the Dual Combined Gain technique employed in the GH6.

What’s up with Lumix Pro Services (LPS) in Europe?

DE: Here’s the second reader question (I think he’s located in Europe): Why was Lumix Pro Services (LPS) scaled down in Europe? This included closing the programme entirely in many countries and disqualifying MFT bodies altogether. What are the plans for LPS in Europe going forward?

YY: The LUMIX Pro Service in Europe will not be completely shut down, but will remain fully operational for LUMIX users. We will always face our users and respond to their problems.

I’m not located in Europe myself, so I can’t speak to any of this personally. From the response above and our in-person discussion, LPS coverage in the EU hasn’t by any means ceased. I didn’t think to press for a very specific answer about the individual countries, but I guess it’s possible that they’ve consolidated service centers there. They were quite adamant that LPS is very much alive and well in the EU and strongly focused on the needs of their current users.

That’s the key point though: “current” users. Panasonic’s professional user base and especially the gear they use has changed dramatically since the full-frame S series cameras were launched.

Once upon a time, all Panasonic pros had were Micro Four Thirds bodies, which they sought out in part for their excellent video capabilities at low price points. Once the full-frame lineup launched, though, these professionals rapidly migrated to the new platform, with the result that there are far fewer MFT pro users in the EU than there were just a year or two ago.

No company has infinite resources, so you always have to pick your shots, and concentrate available resources where they’ll do the most good for the most people.

Providing high-level LPS support for both MFT and full-frame would involve a very significant cost, stemming from the need to maintain a large parts and loaner inventory for MFT that’s used by a smaller and smaller number of end-users. They might be able to cut back a little on parts and loaner inventory, but that’s just the tip of the iceberg. From inventory to logistics to training, supporting two entirely separate product lines would just be prohibitively expensive, given that the vast majority of their pro user base has migrated to the full-frame line.

I can understand MFT pro users feeling a little abandoned, but I equally understand the unfortunate reality that it just became impossible to continue LPS support for what’s become a very small part of their user base.

Will YouTubers switch to the S series, or will the GH line continue to be popular?

DE: A lot of YouTube videos have very high production values these days. Is that market shifting towards higher-end products like the S series full-frame cameras, or do you see products like the GH series continuing to be the camera of choice in that market segment?

YY: As I mentioned earlier, there is an explosive increase in the number of new “solo-operation creators” who do everything from shooting to editing on their own and distribute their content through SNS (social networking services). We believe it is in our DNA to serve a wide range of creators in the field of photography and videography.

We expect YouTubers’ needs for cameras to span a wide range from high-end to mid-range, so we offer two systems with different features (FF and MFT) to provide the best system for each creator. We aim to help a wide range of creators express their vision.

There’s obviously a very wide range of YouTube creators. Some channels have huge teams and very sophisticated video production, while many are one-person shops with a single camera.

Panasonic sees their market as the mid- to high-end of that spectrum. At the low end, a cell phone and a selfie stick are all that’s needed, while mid-range users will appreciate the compactness and affordable capabilities of the GH line, and high-end videographers will migrate heavily to Panasonic’s full-frame products.

While Yamane-san didn’t give any explicit numbers, my impression was that their MFT models are in a very solid position, and that Panasonic expects them to grow and penetrate further into the mid-range YouTube market.

Where are we going with AI-based image enhancement?

DE: This is a question I’m asking all of the manufacturers: In the camera market, we’re seeing AI-based algorithms used for subject recognition, and to some extent scene recognition, but not for manipulating the images themselves. I’d characterize all of these as “capture aids”, in that they assist the photographer with the mechanics of getting the images they want, but they don’t affect the character or content of the images themselves. (HDR capture is an exception.) In contrast, smartphone makers have used extensive machine learning-based image manipulation, strongly adjusting image tone, color and even focus in applications like night shots, portraits (adjusting skin tones in particular) and general white balance. I think it’s going to be critical for traditional camera makers to match the image quality (or perhaps more accurately “image appeal”) offered by the phone makers, and this feels like a distinct disadvantage for camera manufacturers in their competition with smartphones. What can you say about Panasonic’s strategy and work in this area?

YY: Images and videos taken with a smartphone will have the same image quality regardless of who takes them.

The defining characteristic of system cameras is that the photographer’s taste and skill are always reflected in their work. While preserving the joy of taking pictures, we make cameras in the hope that people will experience the joy of creating content with their own hands.

Unlike with images and videos created by smartphones, the essence of the camera is creation by oneself, and I think preserving that essence is the key to the survival of cameras.

This is almost exactly what I heard from every camera maker: “Phones take away your creativity of expression, cameras support it.”

There’s certainly an element of truth to this; the “look” of a smartphone photo has much more to do with whether it’s an iPhone or a Samsung product (or even which generation of either you’re using) than with the person tripping the shutter.

You’re certainly free to compose the shot however you like, and many phones provide both pre- and post-capture adjustments, but it’s definitely the case that phones take on much more of the responsibility for what the image looks like.

Speaking for myself though, while I often do want to have full control over every detail of an image, there are also a lot of times when I’d like the camera to get me part of the way there. Particularly in difficult or mixed lighting, modern smartphones do an amazing job of balancing tone, color and contrast. It would be great to have a partly-processed, smartphone-like image to use as a starting point, rather than a completely unadjusted image.

Just as shooting controls let us adjust contrast, sharpness, white balance, saturation and hue before capture, why couldn’t there also be AI-driven modes that we could apply as much or as little as we like to the photos we’re shooting? Why not have an “AI Night Scene” parameter with settings ranging from -2 to +2?

And it’s not as if having these adjustments for JPEG images would remove our ability to work from the unadulterated raw image, either: After all, that’s exactly how RAW files already relate to the contrast, sharpness and other adjustments just mentioned.

Given that we’d presumably always have original RAW files to go back to, I don’t see any downside to AI-based image adjustments, and doubt many of my fellow photographers would see them as anything but a positive.

Camera makers are in a tough spot with this sort of deep-learning application, though, in that the smartphone makers have R&D budgets two or more orders of magnitude bigger than anything conventional camera makers could dream of. Camera makers are doing an excellent job with subject recognition and focus tracking, as they have huge libraries of images to work with from years of work on AF algorithms. AI-based image tweaking is a whole other area, though, needing every bit as much R&D effort as is currently being applied to AF advancement.

Despite the manufacturers’ current reticence, I think AI image enhancement will have to come at some point, and I predict it will happen sooner rather than later. For all their strong talk about leaving creative control to the photographer, I’d be very surprised if most camera makers weren’t also exploring computational image enhancement…

Summary

As usual, this was a great interview with Yamane-san; the collective time and thought his staff spent on the questions showed in the quality of the answers. I think the biggest news for Panasonic users is that the overall market is doing very well, and Panasonic is doing even better(!) Years of struggle and effort seem to be really paying off for Panasonic these days: As strong as the general camera market is right now, Panasonic’s business is growing even faster. The key is their full-featured, rugged, yet affordable full-frame bodies, especially the S5II/IIX.

Panasonic has always been very strong in the video arena, but their full-frame models have kicked things up a couple of notches. Particularly important is the way models like the S1H, BGH1 and BS1H can match the output characteristics of their high-end VariCam cameras, and that all three models have been approved for use in Netflix-destined video production. This has truly opened the floodgates among pro videographers. At the same time, still image quality has been excellent as well, and the PDAF-equipped S5II/S5IIX will open up a lot of previously challenging applications for the company.

The advent of PDAF in their product line has meant a complete revamping of their AF algorithms, which now take advantage of the best characteristics of CDAF, DFD and PDAF, as the subject requires. PDAF gives them greater AF speed, particularly when tracking moving objects, while DFD and CDAF bring increased precision. The camera uses a complex algorithm to decide which to use when and how to best leverage the strengths of each method. Yamane-san underscored in our side-discussion that we should view the S5 II/IIX as an entirely new generation of AF.

While the S5 II and S5 IIX share common hardware and a lot of capabilities, Panasonic developed two separate models to meet the needs of mid-range and professional users at the same time. The S5 IIX provides features and a user interface suited to professional videographers that would be burdensome to high-level amateurs. By making two models, Panasonic could address the needs of each sub-market separately.

In response to one reader question, I learned a lot about the reasons for choosing Dual Native ISO in some cameras and Dual Combined Gain in others. The response to another clarified what’s going on in Europe with Lumix Pro Services (LPS). Changes in policies there reflect the significant shift in the equipment choices of Panasonic’s professional user base.

Finally, in line with essentially every other manufacturer I asked, Panasonic doesn’t see AI-based image-enhancement processing as something that photographers are looking for them to provide. Their core philosophy is to keep creative control solely in the hands of the photographer rather than with AI-based algorithms that may produce unpredictable results. (For what it’s worth, I personally think the industry is going to have to come around to the idea that many photographers would appreciate at least some AI help with tricky lighting and white balance situations, but Panasonic’s position is very much in line with everyone else I talked to.)

As always, my interview with Yamane-san was very informative: Many thanks to him for his commitment to clear, detailed answers and to his staff for doing the background work needed to provide them.

I left the conversation with the feeling that after years of struggle, Panasonic is on a clear path to success in their chosen market segments. I’d long felt that the unsung capabilities of Panasonic’s cameras were one of the industry’s best-kept secrets. It’s nice to see them finally enjoying the fruits of their long labor, and the benefits of combining PDAF with their unique DFD/CDAF approach to autofocus. The overall message from Panasonic is one of growth, strength and high user acceptance. I’m looking forward to whatever they come up with next!